Uncertainty Propagation

The UncertaintyPropagation module propagates an uncertain input \(x \sim \mathcal{N}(u,\Sigma_u)\) through the Gaussian process to estimate the output uncertainty of the simulation. It is based on Girard's thesis [1].
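The module uses Girard's analytical approximation, which is described below. As a reference point, the quantity being approximated can also be estimated by brute-force Monte Carlo sampling: draw inputs from \(\mathcal{N}(u,\Sigma_u)\), evaluate the GP predictive mean and variance at each sample, and combine them with the law of total variance. The following is a minimal sketch, not the module's API. The training data, the kernel hyperparameters and the names `gp_predict` and `propagate_mc` are hypothetical, and the input is one-dimensional, so \(\Sigma_u\) reduces to a scalar variance `var_u`.

```python
import math
import random

# Hypothetical 1-D training data and hyperparameters (illustration only).
X_TRAIN = [0.0, 1.0, 2.0, 3.0, 4.0]
Y_TRAIN = [math.sin(x) for x in X_TRAIN]
SIGNAL_VAR, LENGTH, NOISE_VAR = 1.0, 1.0, 1e-4


def se_kernel(a, b):
    """Squared exponential covariance for scalar inputs (noise-free part)."""
    return SIGNAL_VAR * math.exp(-0.5 * (a - b) ** 2 / LENGTH ** 2)


def solve(A, b):
    """Solve A z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (M[r][n] - sum(M[r][c] * z[c] for c in range(r + 1, n))) / M[r][r]
    return z


# Training covariance with noise on the diagonal, and beta = K^{-1} y.
K = [[se_kernel(a, b) + (NOISE_VAR if i == j else 0.0)
      for j, b in enumerate(X_TRAIN)] for i, a in enumerate(X_TRAIN)]
BETA = solve(K, Y_TRAIN)


def gp_predict(x):
    """GP predictive mean mu(x) and variance sigma^2(x), noise included."""
    k = [se_kernel(x, xi) for xi in X_TRAIN]
    mean = sum(ki * bi for ki, bi in zip(k, BETA))
    var = se_kernel(x, x) + NOISE_VAR - sum(ki * wi for ki, wi in zip(k, solve(K, k)))
    return mean, var


def propagate_mc(u, var_u, n_samples=5000, seed=0):
    """Monte Carlo estimate of the mean and variance of p(y | B, u, var_u)."""
    rng = random.Random(seed)
    preds = [gp_predict(rng.gauss(u, math.sqrt(var_u))) for _ in range(n_samples)]
    mean = sum(m for m, _ in preds) / n_samples
    # Law of total variance: Var[y] = E[sigma^2(x)] + Var[mu(x)].
    var_of_mean = sum((m - mean) ** 2 for m, _ in preds) / n_samples
    mean_of_var = sum(v for _, v in preds) / n_samples
    return mean, mean_of_var + var_of_mean


print(gp_predict(2.0))          # prediction at a certain input
print(propagate_mc(2.0, 0.01))  # prediction at an uncertain input
```

Sampling is simple but needs many GP evaluations per query, which is one reason to prefer a closed-form approximation when the propagation has to be repeated many times.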

Note

The following explanation is an excerpt from my paper [2].

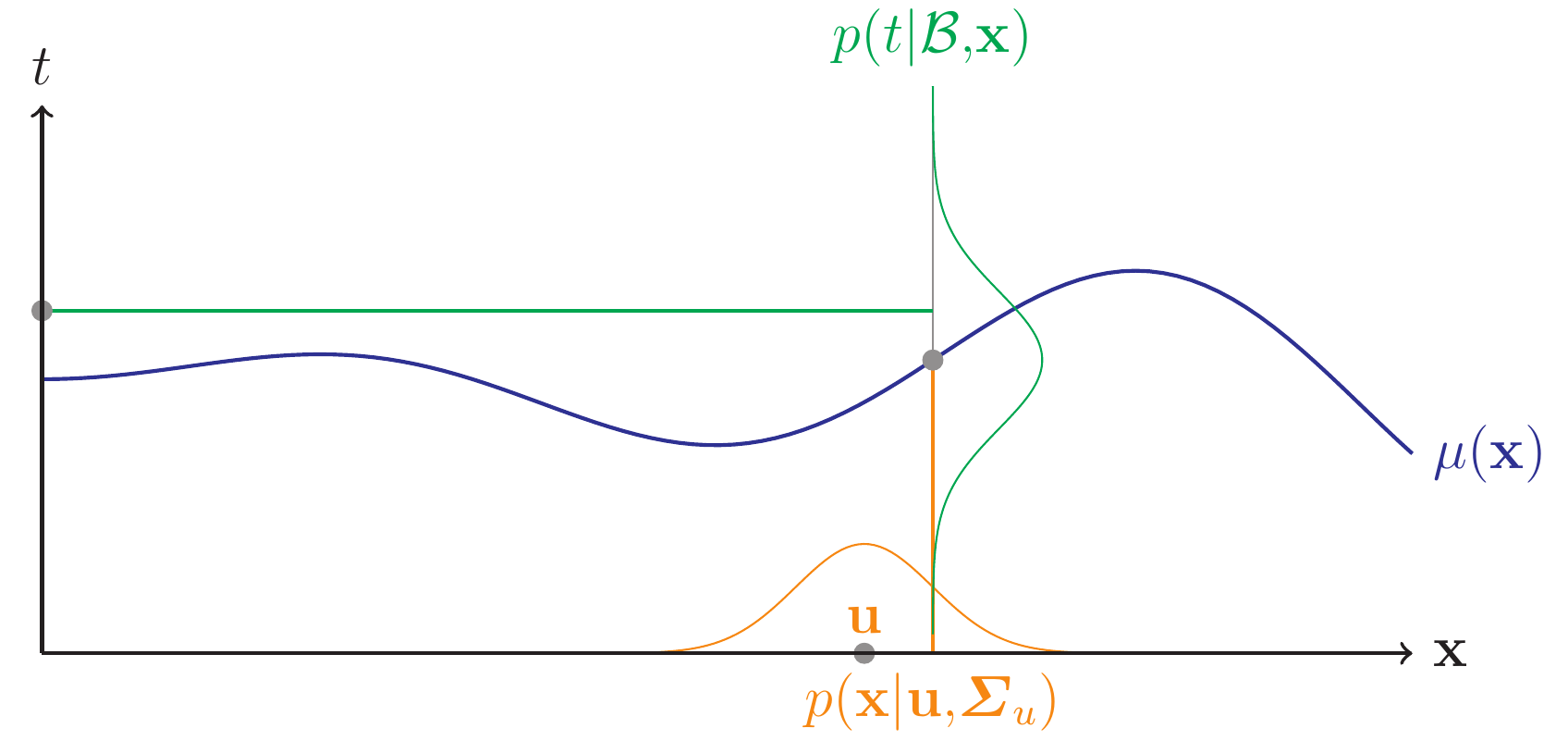

We assume that the input parameters will be estimated from real-world data using a maximum likelihood approach. Under this assumption, we can represent the uncertainty about an input parameter as a normal distribution. For an uncertain input \(x \sim \mathcal{N}(u,\Sigma_u)\), the output distribution can be written as:

\[p(y|\mathcal{B},u,\Sigma_u) = \int p(y|\mathcal{B},x)\,p(x|u,\Sigma_u)\,dx\]

This formula is illustrated in the following figure:

Here, \(\Sigma_u\) is the covariance matrix of the uncertain input; it captures both the uncertainty of each input parameter and the dependencies between them. As the distribution \(p(y|\mathcal{B},u,\Sigma_u)\) is hard to determine analytically, Girard approximates its mean and variance:
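For orientation, the general shape of such a moment approximation can be written in terms of the GP predictive mean \(\mu(x)\) and variance \(\sigma^2(x)\), writing \(m(u,\Sigma_u)\) for the output mean. The exact output variance follows from the law of total variance; expanding \(\sigma^2\) to second order and \(\mu\) to first order around \(u\) then gives the form below. This is a sketch under those assumptions, not a transcription of the expressions in [1] or [2], which are written directly in terms of the covariance function and its derivatives.

\[
\begin{aligned}
m(u,\Sigma_u) &= \mathbb{E}_x[\mu(x)] \approx \mu(u),\\
v(u,\Sigma_u) &= \mathbb{E}_x[\sigma^2(x)] + \operatorname{Var}_x[\mu(x)]
\approx \sigma^2(u) + \tfrac{1}{2}\operatorname{tr}\!\left(\nabla^2\sigma^2(u)\,\Sigma_u\right) + \nabla\mu(u)^\top \Sigma_u\,\nabla\mu(u).
\end{aligned}
\]

The first-order term of \(\sigma^2\) drops out because \(\mathbb{E}_x[x-u] = 0\). Differentiating \(\sigma^2\) and \(\mu\) through the GP equations is what brings in the gradient and Hessian of the covariance function.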

Here, \(C'\) is the gradient and \(C''\) the Hessian of the covariance function. For the squared exponential covariance function, \(C''(u,u)\) equals 0. Because the approach makes no further assumptions about the covariance function, other covariance functions can be plugged in as long as they are twice differentiable.
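Why \(C''(u,u)\) vanishes for the squared exponential can be checked numerically. The sketch below assumes that \(C''(u,u)\) denotes the second derivative of the diagonal \(x \mapsto C(x,x)\) at \(x = u\), which is the reading under which the statement above holds. For contrast, it also computes the second derivative in the first argument alone, which is not zero. Scalar inputs and the hyperparameter values are hypothetical.

```python
import math

SIGNAL_VAR = 1.5   # hypothetical amplitude s^2
LENGTH = 0.7       # hypothetical length scale


def se_kernel(a, b):
    """Squared exponential covariance for scalar inputs."""
    return SIGNAL_VAR * math.exp(-0.5 * (a - b) ** 2 / LENGTH ** 2)


def second_derivative(f, x, h=1e-3):
    """Central finite-difference estimate of f''(x)."""
    return (f(x + h) - 2.0 * f(x) + f(x - h)) / h ** 2


u = 0.3
# Assumed reading of C''(u, u): curvature of the diagonal x -> C(x, x).
# The SE kernel is stationary, so C(x, x) = SIGNAL_VAR for every x.
diag_curvature = second_derivative(lambda x: se_kernel(x, x), u)
# Contrast: curvature in the first argument only, C(x, u) at x = u,
# whose exact value is -SIGNAL_VAR / LENGTH**2.
cross_curvature = second_derivative(lambda x: se_kernel(x, u), u)
print(diag_curvature, cross_curvature)
```

The same reasoning applies to any stationary covariance function, because \(C(x,x)\) is then constant.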

We use this approximation for the output uncertainty \(v(u,\Sigma_u)\) as the basis for our inverse uncertainty propagation. At this point, the uncertainty propagation involves two layers of approximation: first, the simulation is approximated by a Gaussian process, and second, the uncertainty is propagated through that Gaussian process using an approximate formula.
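The error introduced by the second layer can be examined in isolation on a toy problem. The sketch below replaces the Gaussian process with hypothetical closed-form stand-ins \(\mu(x) = \sin x\) and \(\sigma^2(x) = 0.1 + 0.05x^2\), for which the exact moments under a scalar input \(x \sim \mathcal{N}(u, s^2)\) are known. It compares them with a Taylor-style approximation that takes the mean as \(\mu(u)\) and the variance as \(\sigma^2(u) + \tfrac{1}{2}\sigma^{2\,\prime\prime}(u)s^2 + \mu'(u)^2 s^2\). The stand-in functions and the names `exact_moments` and `taylor_moments` are assumptions chosen for illustration.

```python
import math


# Hypothetical stand-ins for the GP predictive mean and variance (1-D input).
def mu(x):
    return math.sin(x)


def sigma2(x):
    return 0.1 + 0.05 * x ** 2


def exact_moments(u, s2):
    """Exact mean and variance of y for x ~ N(u, s2), via the law of total variance."""
    mean = math.sin(u) * math.exp(-s2 / 2)                    # E[sin x]
    second = 0.5 * (1 - math.cos(2 * u) * math.exp(-2 * s2))  # E[sin^2 x]
    var_of_mean = second - mean ** 2                          # Var[mu(x)]
    mean_of_var = 0.1 + 0.05 * (u ** 2 + s2)                  # E[sigma^2(x)]
    return mean, mean_of_var + var_of_mean


def taylor_moments(u, s2):
    """Approximation: m = mu(u), v = sigma2(u) + 0.5*sigma2''(u)*s2 + mu'(u)^2*s2."""
    d_mu = math.cos(u)   # mu'(u)
    dd_sigma2 = 0.1      # sigma2''(u), constant for this quadratic stand-in
    return mu(u), sigma2(u) + 0.5 * dd_sigma2 * s2 + d_mu ** 2 * s2


for s2 in (0.01, 0.1, 1.0):
    print(s2, exact_moments(1.0, s2), taylor_moments(1.0, s2))
```

For small input variances both results agree closely. As \(s^2\) grows, the nonlinearity of \(\mu\) over the input distribution is no longer captured and the mean in particular drifts away from the exact value.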

Using this approach, we can clearly distinguish between aleatory, epistemic and code uncertainty. Aleatory uncertainty is represented by \(v_t\), code uncertainty is represented by \(\sigma^2(u)-v_t\) and epistemic uncertainty is \(v(u,\Sigma_u) - \sigma^2(u)\).
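The decomposition is plain arithmetic on three variances. A minimal sketch, with hypothetical names (`decompose`, `UncertaintyBreakdown`) that are not part of the module's API; in practice \(v_t\), \(\sigma^2(u)\) and \(v(u,\Sigma_u)\) would come from the Gaussian process and the approximation described above.

```python
from dataclasses import dataclass


@dataclass
class UncertaintyBreakdown:
    aleatory: float   # v_t
    code: float       # sigma^2(u) - v_t
    epistemic: float  # v(u, Sigma_u) - sigma^2(u)


def decompose(v_t, sigma2_u, v_u):
    """Split the propagated output variance v(u, Sigma_u) into three parts."""
    return UncertaintyBreakdown(
        aleatory=v_t,
        code=sigma2_u - v_t,
        epistemic=v_u - sigma2_u,
    )


print(decompose(v_t=0.01, sigma2_u=0.05, v_u=0.2))
```

By construction the three parts telescope: their sum is exactly \(v(u,\Sigma_u)\), so each one can be read as a share of the total output variance.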

Usage: See Getting Started

References

[1] Girard, A. Approximate Methods for Propagation of Uncertainty with Gaussian Process Models. PhD thesis, University of Glasgow, 2004.

[2] Baumgaertel, P.; Endler, G.; Wahl, A. M. & Lenz, R. Inverse Uncertainty Propagation for Demand Driven Data Acquisition. Proceedings of the 2014 Winter Simulation Conference, IEEE Press, 2014, pp. 710-721. https://www6.cs.fau.de/publications/public/2014/WinterSim2014_baumgaertel.pdf