| Home | Trees | Indices | Help |

|

|---|

|

|

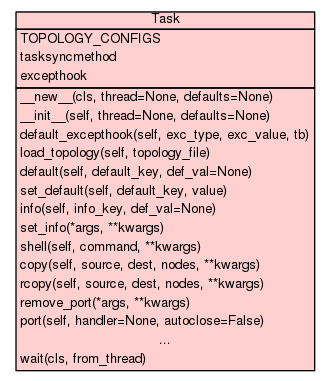

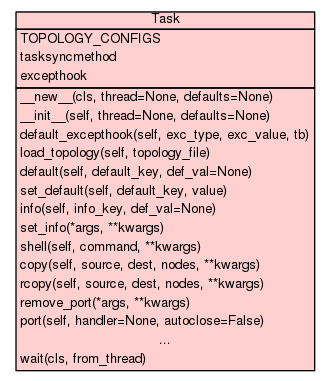

The Task class defines an essential ClusterShell object that executes commands in parallel and makes their results easy to retrieve.

More precisely, a Task object manages a coordinated (i.e. according to its current parameters) collection of independent parallel Worker objects. See ClusterShell.Worker.Worker for further details on ClusterShell Workers.

Always bound to a specific thread, a Task object acts like a "thread singleton". So most of the time, and especially in single-threaded applications, you can get the current task object with the following top-level Task module function:

>>> task = task_self()

However, if you want to create a task in a new thread, use:

>>> task = Task()

To create or get the instance of the task associated with the thread object thr (threading.Thread):

>>> task = Task(thread=thr)

To submit a command to execute locally within task, use:

>>> task.shell("/bin/hostname")

To submit a command to execute to some distant nodes in parallel, use:

>>> task.shell("/bin/hostname", nodes="tiger[1-20]")

The previous examples submit commands for execution but do not let your program interact with the results while the commands run. To interact during command execution, your program has to define event handlers that listen for local or remote events. These handlers are based on the EventHandler class, defined in ClusterShell.Event. The following example shows how to submit a command on a cluster with a registered event handler:

>>> task.shell("uname -r", nodes="node[1-9]", handler=MyEventHandler())

Run the task in its associated thread (this blocks only if the calling thread is the thread associated with the task):

>>> task.resume()

or:

>>> task.run()

You can also pass arguments to task.run() to schedule a command exactly like in task.shell(), and run it:

>>> task.run("hostname", nodes="tiger[1-20]", handler=MyEventHandler())

A common need is to set a maximum delay for command execution, especially when the command's run time is not known. Doing this with a ClusterShell Task is very straightforward. To limit the execution time on each node, use the timeout parameter of the shell() or run() methods to set a delay in seconds, like:

>>> task.run("check_network.sh", nodes="tiger[1-20]", timeout=30)

You can then either use Task's iter_keys_timeout() method after execution to see on which nodes the command has timed out, or listen for ev_timeout() events in your event handler.

To get command results, you can either use Task's iter_buffers() method for standard output and iter_errors() for standard error after command execution (common output contents are automatically gathered), or listen for ev_read() and ev_error() events in your event handler to get live command output.

To get command return codes, you can either use Task's iter_retcodes(), node_retcode() and max_retcode() methods after command execution, or listen for ev_hup() events in your event handler.

_SyncMsgHandler: Special task control port event handler.

tasksyncmethod: Class encapsulating a function that checks whether the calling task is running or is the current task, so that it can be used as a decorator that makes the wrapped task method thread-safe.

_SuspendCondition: Special class to manage the task suspend condition.


a new object with type S, a subtype of T

TOPOLOGY_CONFIGS =

_tasks =

_taskid_max = 0

_task_lock = threading.Lock()

excepthook

For a task bound to a specific thread, this class acts like a "thread singleton", so a new-style class is used and new objects are only instantiated if needed.
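The "thread singleton" behaviour described above can be sketched with the standard library alone. This is a minimal illustration, not ClusterShell's implementation: `MiniTask` and `mini_task_self()` are hypothetical names, and the sketch only shows the idea of overriding `__new__` so that an object is instantiated only when the given thread has none yet.

```python
import threading


class MiniTask(object):
    """Hypothetical per-thread singleton (not ClusterShell code)."""

    _tasks = {}                      # thread object -> MiniTask instance
    _task_lock = threading.Lock()    # protects _tasks

    def __new__(cls, thread):
        with cls._task_lock:
            task = cls._tasks.get(thread)
            if task is None:
                # Instantiate only when this thread has no task yet.
                task = object.__new__(cls)
                task.thread = thread
                cls._tasks[thread] = task
        return task


def mini_task_self():
    """Return the MiniTask bound to the calling thread."""
    return MiniTask(thread=threading.current_thread())


# Same thread: always the same object.
first = mini_task_self()
second = mini_task_self()

# Another thread gets its own instance.
seen = []
worker = threading.Thread(target=lambda: seen.append(mini_task_self()))
worker.start()
worker.join()
```

Looking up `MiniTask(thread=worker)` afterwards returns the instance that was created inside the worker thread, which mirrors the "create or get" wording used for `Task(thread=thr)`.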

|

Initialize a Task, creating a new non-daemonic thread if needed.

|

Default excepthook for a new Task. When an exception is raised and not caught on the Task thread, excepthook is called, which is default_excepthook by default. Once excepthook has been overridden, you can still call default_excepthook if needed.

Load propagation topology from provided file. On success, task.topology is set to a corresponding TopologyTree instance. On failure, task.topology is left untouched and a TopologyError exception is raised. |

Return per-task value for key from the "default" dictionary. See set_default() for a list of reserved task default_keys. |

Set task value for specified key in the dictionary "default". Users may store their own task-specific key, value pairs using this method and retrieve them with default(). Task default_keys are:

Threading considerations: Unlike set_info(), set_default() immediately updates the underlying dictionary in a thread-safe manner, whether or not it is called from the task's thread. This method doesn't wake up the engine when called.

Return per-task information. See set_info() for a list of reserved task info_keys. |

Set the task info value for a specific key. Key, value pairs can be passed to the engine and/or workers. Users may store their own task-specific info key, value pairs using this method and retrieve them with info().

The following example changes the fanout value to 128:

>>> task.set_info('fanout', 128)

The following example enables debug messages:

>>> task.set_info('debug', True)

Task info_keys are:

Threading considerations: Unlike set_default(), the underlying info dictionary is only modified from the task's thread. So calling set_info() from another thread queues the request for later application (at run time) using the task dispatch port. When received, the request wakes up the engine if the task is running, and the info dictionary is then updated.
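The difference between the two threading models can be sketched as follows. This is a standard-library illustration under stated assumptions, not ClusterShell code: `MiniTask`, `apply_pending()` and the internal `queue.Queue` (standing in for the task dispatch port) are hypothetical.

```python
import queue
import threading


class MiniTask(object):
    """Hypothetical sketch; names do not come from ClusterShell."""

    def __init__(self):
        self._owner = threading.current_thread()   # the "task's thread"
        self._info = {}
        self._default = {}
        self._default_lock = threading.Lock()
        self._pending = queue.Queue()               # stands in for the dispatch port

    def set_default(self, key, value):
        # Updated immediately from any thread, guarded by a lock.
        with self._default_lock:
            self._default[key] = value

    def set_info(self, key, value):
        if threading.current_thread() is self._owner:
            self._info[key] = value                 # direct update
        else:
            self._pending.put((key, value))         # deferred update

    def apply_pending(self):
        # Called from the owner thread, e.g. when the run loop wakes up.
        while True:
            try:
                key, value = self._pending.get_nowait()
            except queue.Empty:
                break
            self._info[key] = value

    def info(self, key, def_val=None):
        return self._info.get(key, def_val)


task = MiniTask()
task.set_info("fanout", 64)                          # owner thread: applied now

other = threading.Thread(target=task.set_info, args=("fanout", 128))
other.start()
other.join()
before = task.info("fanout")                         # request is still queued

task.apply_pending()
after = task.info("fanout")                          # queued request applied
```

In this sketch the value set from the other thread only becomes visible once the owner thread drains the queue, which is the "applied later, at run time" behaviour the text describes for set_info().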

|

Schedule a shell command for local or distant parallel execution. This essential method creates a local or remote Worker (depending on the presence of the nodes parameter) and immediately schedules it for execution in the task's runloop. So, if the task is already running (i.e. the method is called from an event handler), the command is started immediately, assuming current execution constraints are met (e.g. fanout value). If the task is not running, the command is not started but scheduled for later execution. See resume() to start the task runloop. The following optional parameters are passed to the underlying local or remote Worker constructor:

Local usage:

task.shell(command [, key=key] [, handler=handler]
           [, timeout=secs] [, autoclose=enable_autoclose]
           [, stderr=enable_stderr])

Distant usage:

task.shell(command, nodes=nodeset [, handler=handler]
           [, timeout=secs] [, autoclose=enable_autoclose]
           [, tree=None|False|True] [, remote=False|True]
           [, stderr=enable_stderr])

Example:

>>> task = task_self()
>>> task.shell("/bin/date", nodes="node[1-2345]")
>>> task.resume()

Add an EnginePort instance to Engine (private method).

|

Close and remove a port from task previously created with port().

|

Create a new task port. A task port is an abstraction object to deliver messages reliably between tasks. Basic rules:

If handler is set to a valid EventHandler object, the port is a send-once port, i.e. a message sent to this port generates an ev_msg event notification issued to the port's task. If handler is not set, the task can only receive messages on the port by calling port.msg_recv().

Create a timer bound to this task that fires at a preset time in the future by invoking the ev_timer() method of `handler' (provided EventHandler object). Timers can fire either only once or repeatedly at fixed time intervals. Repeating timers can also have their next firing time manually adjusted.

The mandatory parameter `fire' sets the firing delay in seconds.

The optional parameter `interval' sets the firing interval of the timer. If not specified, the timer fires once and then is automatically invalidated.

Time values are expressed in seconds using floating point values. Precision is implementation (and system) dependent.

The optional parameter `autoclose', if set to True, creates an "autoclosing" timer: it will be automatically invalidated as soon as all other non-autoclosing task objects (workers, ports, timers) have finished. The default value is False, which means the timer will retain the task's runloop until it is invalidated.

Return a new EngineTimer instance. See ClusterShell.Engine.Engine.EngineTimer for more details.
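How `fire' and `interval' combine can be sketched with a small generator. This is only an illustration of the schedule described above: `firing_times()` is a hypothetical helper, it is not part of ClusterShell, and using `None` to mean "no interval" is an assumption of this sketch rather than the library's actual default.

```python
import itertools


def firing_times(fire, interval=None):
    """Yield firing offsets in seconds, counted from timer creation."""
    yield float(fire)
    if interval is None:
        return                      # one-shot timer: invalidated after firing
    for n in itertools.count(1):
        # Repeating timer: one more firing every `interval` seconds.
        yield fire + n * interval


one_shot = list(firing_times(2.0))
repeating = list(itertools.islice(firing_times(2.0, interval=0.5), 4))
```

Here `one_shot` holds a single offset, while `repeating` holds the first four offsets of a timer that never stops on its own, which is why a non-autoclosing repeating timer keeps the runloop alive until it is invalidated.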

Add a timer to task engine (thread-safe).

|

Schedule a worker for execution, i.e. add the worker to the task running loop. The worker will start processing immediately if the task is running (e.g. called from an event handler) or as soon as the task is started otherwise. Only useful for manually instantiated workers, for example:

>>> task = task_self()
>>> worker = WorkerSsh("node[2-3]", None, 10, command="/bin/ls")
>>> task.schedule(worker)
>>> task.resume()

|

Resume task. If task is task_self(), workers are executed in the calling thread, so this method will block until all (non-autoclosing) workers have finished. This is always the case for a single-threaded application (e.g. one that doesn't create any Task() instance other than task_self()). Otherwise, the current thread doesn't block. In that case, you may then want to call task_wait() to wait for completion.

Warning: the timeout parameter can be used to set a hard limit on task execution time (in seconds). In that case, a TimeoutError exception is raised if this delay is reached. Its value is 0 by default, which means no task time limit (TimeoutError is never raised). In order to set a maximum delay for individual command execution, you should use Task.shell()'s timeout parameter instead.

With arguments, it schedules a command exactly as Task.shell() would and runs it. This is the easiest way to simply run a command.

>>> task.run("hostname", nodes="foo")

Without arguments, it starts all outstanding actions and behaves like Task.resume().

>>> task.shell("hostname", nodes="foo")
>>> task.shell("hostname", nodes="bar")
>>> task.run()

When used with a command, you can set a maximum delay for individual command execution with the timeout parameter (see Task.shell's parameters). You can then listen for ev_timeout() events in your Worker event handlers, or use num_timeout() or iter_keys_timeout() afterwards. But when used as an alias for Task.resume(), the timeout parameter sets a hard limit on task execution time. In that case, a TimeoutError exception is raised if this delay is reached.

Suspend request received.

|

Suspend task execution. This method may be called from another task (thread-safe). The function returns False if the task cannot be suspended (eg. it's not running), or returns True if the task has been successfully suspended. To resume a suspended task, use task.resume(). |

Abort request received.

|

Abort a task. Aborting a task removes (and stops when needed) all workers. If optional parameter kill is True, the task object is unbound from the current thread, so calling task_self() creates a new Task object. |

Process a new message into Task's MsgTree that is coming from:

|

Iterate over timed out keys (ie. nodes) for a specific worker. |

Get buffer for a specific key. When the key is associated to multiple workers, the resulting buffer will contain all workers content that may overlap. This method returns an empty buffer if key is not found in any workers.

Get error buffer for a specific key. When the key is associated to multiple workers, the resulting buffer will contain all workers content that may overlap. This method returns an empty error buffer if key is not found in any workers.

Return return code for a specific key. When the key is associated to multiple workers, return the max return code from these workers. Raises a KeyError if key is not found in any finished workers.

Get max return code encountered during last run, or None in the following cases:

- all commands timed out,
- no command was executed.

How retcodes work
=================

If the process exits normally, the return code is its exit status. If the process is terminated by a signal, the return code is 128 + signal number.
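The "128 + signal number" convention can be illustrated with a small helper. This is a sketch under stated assumptions: `shell_style_retcode()` is a hypothetical function, not a ClusterShell API, and its input follows the convention of Python's `subprocess` module, where a process killed by signal N is reported with a return code of -N.

```python
import signal


def shell_style_retcode(returncode):
    """Map a subprocess-style returncode to 'exit status or 128 + signal'."""
    if returncode < 0:
        # Negative value: the process was terminated by signal -returncode.
        return 128 - returncode
    # Normal exit: the return code is the exit status itself.
    return returncode


normal = shell_style_retcode(3)
killed = shell_style_retcode(-signal.SIGTERM)

# A "max return code" over several commands is then a plain max().
max_rc = max([0, normal, killed])
```

With this convention a signal-terminated command always yields a value above 128, so it dominates ordinary exit statuses when taking the maximum.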

|

Iterate over buffers, returns a tuple (buffer, keys). For remote workers (Ssh), keys are a list of nodes. In that case, you should use NodeSet.fromlist(keys) to get a NodeSet instance (which is more convenient and efficient). The optional parameter match_keys adds filtering on these keys.

Usage example:

>>> for buffer, nodelist in task.iter_buffers():
...     print(NodeSet.fromlist(nodelist))
...     print(buffer)
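The shape of the (buffer, keys) tuples can be illustrated without ClusterShell. The following is a minimal sketch of grouping keys by identical output, with optional key filtering: `gather_buffers()` and the sample data are hypothetical, and the real method works on the task's own message storage rather than on a plain dictionary.

```python
from collections import OrderedDict


def gather_buffers(outputs, match_keys=None):
    """Group keys whose output is identical; yield (buffer, keys) tuples."""
    groups = OrderedDict()
    for key in sorted(outputs):
        if match_keys is not None and key not in match_keys:
            continue                                  # filtered out
        groups.setdefault(outputs[key], []).append(key)
    for buf, keys in groups.items():
        yield buf, keys


# Hypothetical per-node output of "uname -r".
outputs = {"node1": "3.10.0", "node2": "3.10.0", "node3": "4.4.0"}

grouped = list(gather_buffers(outputs))
filtered = list(gather_buffers(outputs, match_keys=["node3"]))
```

Nodes that printed the same text end up in one tuple, so a caller handles each distinct output once instead of once per node.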

Iterate over error buffers, returns a tuple (buffer, keys). See iter_buffers(). |

Iterate over return codes, returns a tuple (rc, keys). The optional parameter match_keys adds filtering on these keys.

How retcodes work: If the process exits normally, the return code is its exit status. If the process is terminated by a signal, the return code is 128 + signal number.

Return the number of timed out "keys" (ie. nodes). |

Iterate over timed out keys (ie. nodes). |

Class method that blocks the calling thread until all tasks have finished (from a ClusterShell point of view, for instance, when their task.resume() returns). It doesn't necessarily mean that the associated threads have finished.

Get propagation channel for gateway (create one if needed).

Use self.gateways dictionary that allows lookup like:

gateway => (worker channel, set of metaworkers)

|

Release propagation channel associated to gateway. Lookup by gateway, decref associated metaworker set and release channel worker if needed. |


| Generated by Epydoc 3.0.1 on Wed Dec 21 14:07:54 2016 | http://epydoc.sourceforge.net |