dispel4py.examples.graph_testing package¶

Submodules¶

dispel4py.examples.graph_testing.delayed_pipeline module¶

This is a dispel4py graph which produces a pipeline workflow with one producer node (prod) and 2 consumer nodes. The second consumer node delays the output by a fixed time and records the average processing time.
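The delay-and-average behaviour described above can be sketched in plain Python. This is only an illustration and does not use the dispel4py classes: the function `delayed_identity`, the 0.01 second delay and the five input values are all assumptions made for the example.

```python
import time

def delayed_identity(data, delay, timings):
    """Wait `delay` seconds, record the elapsed time, and pass the data through."""
    start = time.perf_counter()
    time.sleep(delay)
    timings.append(time.perf_counter() - start)
    return data

# Hypothetical run: five blocks, each delayed by 0.01 seconds.
timings = []
outputs = [delayed_identity(i, 0.01, timings) for i in range(5)]

# The average is taken over the per-block elapsed times.
average = sum(timings) / len(timings)
print("Average processing time:", average)
```

The data is returned unchanged, so the delay affects only the timing and not the results.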

Execution:

MPI: Change to the dispel4py directory.

Execute the MPI mapping as follows:

mpiexec -n <number mpi_processes> python -m dispel4py.worker_mpi [-a name_dispel4py_graph] [-f file containing the input dataset in JSON format] [-i number of iterations/runs] [-s]

The argument ‘-s’ forces the graph to run in simple processing mode, which means that the first node of the graph is executed in one process and the remaining nodes are executed in a second process. When [-i number of iterations/runs] is not given, the graph is executed once by default.

For example:

mpiexec -n 6 dispel4py mpi dispel4py.examples.graph_testing.delayed_pipeline

Note

Each node in the graph is executed as a separate MPI process. This graph has 3 nodes. For this reason we need at least 3 MPI processes to execute it.

Output:

Processes: {'TestDelayOneInOneOut2': [2, 3], 'TestProducer0': [4], 'TestOneInOneOut1': [0, 1]}
TestProducer0 (rank 4): Processed 10 iterations.
TestOneInOneOut1 (rank 1): Processed 5 iterations.
TestOneInOneOut1 (rank 0): Processed 5 iterations.
TestDelayOneInOneOut2 (rank 3): Average processing time: 1.00058307648
TestDelayOneInOneOut2 (rank 3): Processed 5 iterations.
TestDelayOneInOneOut2 (rank 2): Average processing time: 1.00079641342
TestDelayOneInOneOut2 (rank 2): Processed 5 iterations.

-

dispel4py.examples.graph_testing.delayed_pipeline.cons1= <dispel4py.examples.graph_testing.testing_PEs.TestOneInOneOut object>¶ adding a processing timer

-

dispel4py.examples.graph_testing.delayed_pipeline.cons2= <dispel4py.new.monitoring.TestDelayOneInOneOut object>¶ important: this is the graph_variable

dispel4py.examples.graph_testing.group_by module¶

This is a dispel4py graph that shows the group-by data pattern to count words.

-

dispel4py.examples.graph_testing.group_by.testGrouping()¶ Creates the test graph.
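The group-by pattern can be illustrated without dispel4py: every block carrying the same key is routed to the same consumer instance, so each instance can keep a local count. The sketch below is an assumption-laden illustration; the helper `instance_for`, the CRC32 hash and the word list are chosen for the example and are not taken from the library.

```python
import zlib
from collections import Counter

def instance_for(word, num_instances):
    """Pick a consumer instance from a stable hash of the grouping key."""
    return zlib.crc32(word.encode("utf-8")) % num_instances

# Hypothetical input stream and two consumer instances.
words = ["mpi", "data", "mpi", "simple", "data", "mpi"]
counters = [Counter() for _ in range(2)]

for word in words:
    # The same word always lands on the same instance.
    counters[instance_for(word, 2)][word] += 1

# Merging the per-instance counts gives the global word count.
total = sum(counters, Counter())
print(total)
```

Because a given word is never split across instances, the per-instance counts can be merged by simple addition.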

dispel4py.examples.graph_testing.grouping_alltoone module¶

This is a dispel4py graph which produces a pipeline workflow in which the producer node prod sends data to the consumer node cons1, which then sends data to node cons2.

Note that this graph defines several instances of the cons1 and cons2 nodes, and all the instances of cons1 send data to only one instance of cons2.

This type of grouping is called global in dispel4py (all to one).
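A minimal sketch of the difference between distributing blocks across instances and sending them all to one instance is given here. It does not call dispel4py; the round-robin distribution for the first hop is an assumption, and the function names are hypothetical.

```python
from collections import Counter

def shuffle_target(block_index, num_instances):
    """Round-robin distribution (assumed behaviour when no grouping is set)."""
    return block_index % num_instances

def global_target(block_index, num_instances):
    """Global grouping: every block goes to the same single instance."""
    return 0

blocks = range(10)

# prod -> cons1: ten blocks spread over five instances.
cons1_load = Counter(shuffle_target(i, 5) for i in blocks)

# cons1 -> cons2: all ten blocks arrive at one of the five instances.
cons2_load = Counter(global_target(i, 5) for i in blocks)

print(cons1_load)
print(cons2_load)
```

With ten blocks and five instances per node, each cons1 instance handles two blocks while a single cons2 instance handles all ten, which is the same shape as the sample output shown for this module.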

It can be executed with MPI and STORM.

MPI: Change to the dispel4py directory.

Execute the MPI mapping as follows:

mpiexec -n <number mpi_processes> dispel4py mpi [-a name_dispel4py_graph] [-f file containing the input dataset in JSON format] [-i number of iterations/runs] [-s]

The argument ‘-s’ forces the graph to run in simple processing mode, which means that the first node of the graph is executed in one process and the remaining nodes are executed in a second process. When [-i number of iterations/runs] is not given, the graph is executed once by default.

For example:

mpiexec -n 11 dispel4py mpi dispel4py.examples.graph_testing.grouping_alltoone -i 10

Note

Each node in the graph is executed as a separate MPI process. This graph has 3 nodes. For this reason we need at least 3 MPI processes to execute it.

Output:

Processing 10 iterations.
Processes: {'TestProducer0': [0], 'TestOneInOneOut2': [6, 7, 8, 9, 10], 'TestOneInOneOut1': [1, 2, 3, 4, 5]}
TestProducer0 (rank 0): Processed 10 iterations.
TestOneInOneOut1 (rank 1): Processed 2 iterations.
TestOneInOneOut1 (rank 2): Processed 2 iterations.
TestOneInOneOut1 (rank 4): Processed 2 iterations.
TestOneInOneOut1 (rank 5): Processed 2 iterations.
TestOneInOneOut1 (rank 3): Processed 2 iterations.
TestOneInOneOut2 (rank 7): Processed 0 iterations.
TestOneInOneOut2 (rank 8): Processed 0 iterations.
TestOneInOneOut2 (rank 9): Processed 0 iterations.
TestOneInOneOut2 (rank 6): Processed 10 iterations.
TestOneInOneOut2 (rank 10): Processed 0 iterations.

Note that only one instance of the consumer node TestOneInOneOut2 (rank 6) received all the input blocks from the previous PE, as the grouping has been defined as global.

STORM:

-

dispel4py.examples.graph_testing.grouping_alltoone.testAlltoOne()¶ Creates a graph with two consumer nodes and a global grouping.

Return type: the created graph

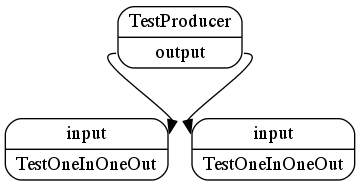

dispel4py.examples.graph_testing.grouping_onetoall module¶

This is a dispel4py graph which produces a workflow which copies the data (from node prod) to one node (cons).

Note that in this graph two processes are assigned to instances of the same PE:

cons = t.TestOneInOneOut()

cons.numprocesses=2

Another interesting point in this example is how to define the different types of groupings. In this example we have:

cons.inputconnections['input']['grouping'] = 'all'

which means that the prod node sends copies of its output data to all the connected instances. For that reason, the node cons has to specify the input grouping ‘all’ on the connection.

In grouping_split_merge, we use another type of grouping, which is group by. However, in that case the grouping type is defined by the PE class (WordCounter), which means it applies to all instances of that class (unless it is explicitly overridden by an instance, as we did above).

If you compare this graph with teecopy, they look quite similar. However, they do different things. In this example, we have two instances of the same PE and the node prod sends the same data to both instances, whereas in teecopy the node prod sends the same data to two different PEs.
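The effect of the ‘all’ grouping can be sketched in a few lines of plain Python. This is an illustration only: `send_all` and the list-based inboxes are hypothetical stand-ins and do not reflect how dispel4py delivers data internally.

```python
def send_all(block, inboxes):
    """'all' grouping: deliver a copy of the block to every instance."""
    for inbox in inboxes:
        inbox.append(block)

# Two instances of one PE, mirroring cons.numprocesses = 2.
cons_instances = [[], []]

# The producer emits a single block in this hypothetical run.
for block in [1]:
    send_all(block, cons_instances)

print(cons_instances)
```

Each instance ends up with its own copy of every block, so one produced block results in one processed block per instance.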

It can be executed with MPI and STORM.

MPI: Change to the dispel4py directory.

Execute the MPI mapping as follows:

mpiexec -n <number mpi_processes> python -m dispel4py.worker_mpi [-a name_dispel4py_graph] [-f file containing the input dataset in JSON format] [-i number of iterations/runs] [-s]

The argument ‘-s’ forces the graph to run in simple processing mode, which means that the first node of the graph is executed in one process and the remaining nodes are executed in a second process. When [-i number of iterations/runs] is not given, the graph is executed once by default.

For example:

mpiexec -n 3 python -m dispel4py.worker_mpi dispel4py.examples.graph_testing.grouping_onetoall

Note

Each node instance in the graph is executed as a separate MPI process. This graph has 2 nodes (prod and cons), and cons runs as two instances. For this reason we need at least 3 MPI processes to execute it.

Output:

Processing 1 iterations
Processes: {'TestProducer0': [2], 'TestOneInOneOut1': [0, 1]}
TestOneInOneOut1 (rank 0): I'm a bolt
TestOneInOneOut1 (rank 1): I'm a bolt
TestProducer0 (rank 2): I'm a spout
Rank 2: Sending terminate message to [0, 1]
TestProducer0 (rank 2): Processed 1 input block(s)
TestProducer0 (rank 2): Completed.
TestOneInOneOut1 (rank 1): Processed 1 input block(s)
TestOneInOneOut1 (rank 1): Completed.
TestOneInOneOut1 (rank 0): Processed 1 input block(s)
TestOneInOneOut1 (rank 0): Completed.

STORM:

-

dispel4py.examples.graph_testing.grouping_onetoall.testOnetoAll()¶

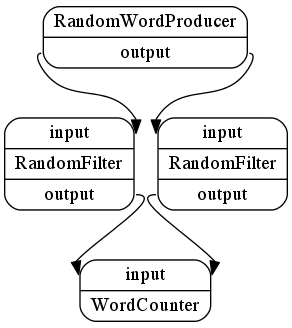

dispel4py.examples.graph_testing.grouping_split_merge module¶

This is a dispel4py graph which produces a workflow that sends copies of the output data from the producer node (words) to two nodes (filter1 and filter2), and the outputs of those two filters are merged in the last node (count).

It can be executed with MPI and STORM.

MPI: Change to the dispel4py directory.

Execute the MPI mapping as follows:

mpiexec -n <number mpi_processes> python -m dispel4py.worker_mpi [-a name_dispel4py_graph] [-f file containing the input dataset in JSON format] [-i number of iterations/runs] [-s]

The argument ‘-s’ forces the graph to run in simple processing mode, which means that the first node of the graph is executed in one process and the remaining nodes are executed in a second process. When [-i number of iterations/runs] is not given, the graph is executed once by default.

For example:

mpiexec -n 4 python -m dispel4py.worker_mpi dispel4py.examples.graph_testing.grouping_split_merge

Note

Each node in the graph is executed as a separate MPI process. This graph has 4 nodes. For this reason we need at least 4 MPI processes to execute it.

Output:

Processing 1 iterations
Processes: {'RandomFilter2': [3], 'WordCounter3': [1], 'RandomFilter1': [0], 'RandomWordProducer0': [2]}
RandomFilter1 (rank 0): I'm a bolt
RandomWordProducer0 (rank 2): I'm a spout
WordCounter3 (rank 1): I'm a bolt
Rank 2: Sending terminate message to [0]
Rank 2: Sending terminate message to [3]
RandomWordProducer0 (rank 2): Processed 1 input block(s)
RandomWordProducer0 (rank 2): Completed.
RandomFilter2 (rank 3): I'm a bolt
Rank 3: Sending terminate message to [1]
RandomFilter2 (rank 3): Processed 1 input block(s)
RandomFilter2 (rank 3): Completed.
Rank 0: Sending terminate message to [1]
RandomFilter1 (rank 0): Processed 1 input block(s)
RandomFilter1 (rank 0): Completed.
WordCounter3 (rank 1): Processed 2 input block(s)
WordCounter3 (rank 1): Completed.

Note

Because the filter PEs drop data at random, the output can differ from one run to the next.

-

dispel4py.examples.graph_testing.grouping_split_merge.testGrouping()¶ Creates the test graph.

dispel4py.examples.graph_testing.large_teecopy module¶

This is a dispel4py graph which produces a workflow that copies the data (from node prod) to two nodes (cons2 and cons3). This example can be used to process a large number of data blocks for testing.

It can be executed with MPI and STORM.

MPI: Change to the dispel4py directory.

Execute the MPI mapping as follows:

mpiexec -n <number mpi_processes> dispel4py [-a name_dispel4py_graph] [-f file containing the input dataset in JSON format] [-i number of iterations/runs] [-s]

The argument ‘-s’ forces the graph to run in simple processing mode, which means that the first node of the graph is executed in one process and the remaining nodes are executed in a second process. When [-i number of iterations/runs] is not given, the graph is executed once by default. <number_of_blocks> is the number of blocks produced by the source PE in each iteration.

For example:

mpiexec -n 4 dispel4py mpi dispel4py.examples.graph_testing.large_teecopy 1000000

Note

Each node in the graph is executed as a separate MPI process. This graph has 4 nodes. For this reason we need at least 4 MPI processes to execute it.

Output:

Processing 1 iterations
Processes: {'TestProducer0': [0], 'TestOneInOneOut2': [2], 'TestOneInOneOut1': [1]}
TestProducer0 (rank 0): I'm a spout
Rank 0: Sending terminate message to [1]
Rank 0: Sending terminate message to [2]
TestProducer0 (rank 0): Processed 1 input block(s)
TestProducer0 (rank 0): Completed.
TestOneInOneOut2 (rank 2): I'm a bolt
TestOneInOneOut2 (rank 2): Processed 1 input block(s)
TestOneInOneOut2 (rank 2): Completed.
TestOneInOneOut1 (rank 1): I'm a bolt
TestOneInOneOut1 (rank 1): Processed 1 input block(s)
TestOneInOneOut1 (rank 1): Completed

-

dispel4py.examples.graph_testing.large_teecopy.testTee()¶ Creates a graph with two consumer nodes and a tee connection.

Return type: the created graph

dispel4py.examples.graph_testing.multi_producer module¶

dispel4py.examples.graph_testing.parallel_pipeline module¶

This is a dispel4py graph where each MPI process computes a partition of the workflow instead of a PE instance. This happens automatically when the graph has more nodes than MPI processes.

In terms of internal execution, the user can control which parts of the graph are distributed to each MPI process. See partition_parallel_pipeline on how to specify the partitioning.

It can be executed with MPI and STORM.

MPI: Change to the dispel4py directory.

Execute the MPI mapping as follows:

mpiexec -n <number mpi_processes> dispel4py mpi [-a name_dispel4py_graph] [-f file containing the input dataset in JSON format] [-i number of iterations/runs] [-s]

The argument ‘-s’ forces the graph to run in simple processing mode, which means that the first node of the graph is executed in one process and the remaining nodes are executed in a second process. When [-i number of iterations/runs] is not given, the graph is executed once by default.

For example:

mpiexec -n 3 dispel4py mpi dispel4py.examples.graph_testing.parallel_pipeline -i 10

Note

To force the partitioning the graph must have more nodes than available MPI processes. This graph has 4 nodes and we use 3 MPI processes to execute it.
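The fallback described in this note can be sketched as follows. The code is an illustration of the rule stated in the text (first node in one partition, remaining nodes in a second one) and is not the dispel4py implementation; the function names are hypothetical.

```python
def needs_partitioning(num_nodes, num_processes):
    """The graph is too large when it has more nodes than MPI processes."""
    return num_nodes > num_processes

def simple_partitions(nodes):
    """Split an ordered node list into [first node] and [all remaining nodes]."""
    return [nodes[:1], nodes[1:]]

nodes = ["TestProducer0", "TestOneInOneOut1", "TestOneInOneOut2", "TestOneInOneOut3"]

if needs_partitioning(len(nodes), 3):
    partitions = simple_partitions(nodes)
else:
    # Enough processes: every node is its own partition.
    partitions = [[node] for node in nodes]

print(partitions)
```

With 4 nodes and 3 processes the producer ends up alone in the first partition and the three consumers share the second.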

Output:

Processing 10 iterations
Graph is too large for MPI job size: 4 > 3. Start simple processing.
Partitions: [TestProducer0], [TestOneInOneOut1, TestOneInOneOut2, TestOneInOneOut3]
Processes: {'GraphWrapperPE5': [1, 2], 'GraphWrapperPE4': [0]}
GraphWrapperPE4 (rank 0): I'm a spout
GraphWrapperPE5 (rank 1): I'm a bolt
Rank 0: Sending terminate message to [1, 2]
GraphWrapperPE4 (rank 0): Processed 10 input block(s)
GraphWrapperPE4 (rank 0): Completed.
GraphWrapperPE5 (rank 1): Processed 5 input block(s)
GraphWrapperPE5 (rank 1): Completed.
GraphWrapperPE5 (rank 2): I'm a bolt
GraphWrapperPE5 (rank 2): Processed 5 input block(s)
GraphWrapperPE5 (rank 2): Completed.

-

dispel4py.examples.graph_testing.parallel_pipeline.testParallelPipeline()¶ Creates a graph with 4 nodes.

Return type: the created graph

dispel4py.examples.graph_testing.partition_parallel_pipeline module¶

This graph is a modification of the parallel_pipeline example, showing how the user can specify how the graph is going to be partitioned into MPI processes.

In this example we specify that one MPI process executes the pipeline of nodes prod, cons1 and cons2 and the other MPI processes execute the remaining node cons3:

graph.partitions = [ [prod, cons1, cons2], [cons3] ]

It can be executed with MPI and Storm. Storm will ignore the partition information.
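One way to picture how a fixed number of MPI processes might be shared between the partitions is sketched below. This is a simplified assumption and not the library's allocation algorithm: the partition that contains the producer is given one process and the remaining processes are divided among the other partitions. The function `allocate` and its parameters are hypothetical.

```python
def allocate(partitions, sources, num_processes):
    """Give each partition containing a source one rank; share the rest evenly."""
    has_source = [any(node in sources for node in part) for part in partitions]
    others = has_source.count(False)
    spare = num_processes - has_source.count(True)
    counts = []
    for flag in has_source:
        if flag:
            counts.append(1)
        else:
            # Never drop below one process per partition.
            counts.append(max(1, spare // max(1, others)))
    return counts

partitions = [["prod", "cons1", "cons2"], ["cons3"]]
counts = allocate(partitions, sources={"prod"}, num_processes=3)
print(counts)
```

Under this assumption, 3 processes give one rank to the partition holding prod and two ranks to the partition holding cons3.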

Execution:

MPI: Change to the dispel4py directory.

Execute the MPI mapping as follows:

mpiexec -n <number mpi_processes> dispel4py mpi [-a name_dispel4py_graph] [-f file containing the input dataset in JSON format] [-i number of iterations/runs] [-s]

The argument ‘-s’ forces the graph to run in simple processing mode, which means that the first node of the graph is executed in one process and the remaining nodes are executed in a second process. When [-i number of iterations/runs] is not given, the graph is executed once by default.

For example:

mpiexec -n 3 python -m dispel4py.worker_mpi dispel4py.examples.graph_testing.partition_parallel_pipeline -i 10

Output:

Partitions: [TestProducer0, TestOneInOneOut1, TestOneInOneOut2], [TestOneInOneOut3]
Processes: {'GraphWrapperPE5': [0, 1], 'GraphWrapperPE4': [2]}
GraphWrapperPE5 (rank 0): I'm a bolt
GraphWrapperPE5 (rank 1): I'm a bolt
GraphWrapperPE4 (rank 2): I'm a spout
Rank 2: Sending terminate message to [0, 1]
GraphWrapperPE4 (rank 2): Processed 10 input block(s)
GraphWrapperPE4 (rank 2): Completed.
GraphWrapperPE5 (rank 1): Processed 5 input block(s)
GraphWrapperPE5 (rank 1): Completed.
GraphWrapperPE5 (rank 0): Processed 5 input block(s)
GraphWrapperPE5 (rank 0): Completed.

-

dispel4py.examples.graph_testing.partition_parallel_pipeline.testParallelPipeline()¶ Creates the parallel pipeline graph with partitioning information.

Return type: the created graph

dispel4py.examples.graph_testing.pipeline_composite module¶

This is a dispel4py graph which produces a pipeline workflow with one producer node and a chain of functions that process the data.

Execution:

Simple processing:

Execute the sequential mapping as follows:

dispel4py simple dispel4py.examples.graph_testing.pipeline_composite [-i number of iterations]

By default, if the number of iterations is not specified, the graph is executed once.

For example:

dispel4py simple dispel4py.examples.graph_testing.pipeline_composite

Output:

Processing 1 iteration.
Inputs: {'TestProducer4': 1}
SimplePE: Processed 1 iteration.
Outputs: {'PE_subtract3': {'output': [5]}}

MPI:

Execute the MPI mapping as follows:

mpiexec -n <number mpi_processes> dispel4py mpi [-a name_dispel4py_graph] [-f file containing the input dataset in JSON format] [-i number of iterations/runs] [-s]

The argument ‘-s’ forces the graph to run in simple processing mode, which means that the first node of the graph is executed in one process and the remaining nodes are executed in a second process. When [-i number of iterations/runs] is not given, the graph is executed once by default.

For example:

mpiexec -n 5 dispel4py mpi dispel4py.examples.graph_testing.pipeline_composite

Note

Each node in the graph is executed as a separate MPI process. This graph has 5 nodes (4 function PEs and one producer). For this reason we need at least 5 MPI processes to execute it.

Output:

Processing 1 iteration.
Processes: {'PE_addTwo0': [2], 'TestProducer4': [0], 'PE_subtract3': [4], 'PE_multiplyByFour1': [1], 'PE_divideByTwo2': [3]}
TestProducer4 (rank 0): Processed 1 iteration.
PE_addTwo0 (rank 2): Processed 1 iteration.
PE_multiplyByFour1 (rank 1): Processed 1 iteration.
PE_divideByTwo2 (rank 3): Processed 1 iteration.
PE_subtract3 (rank 4): Processed 1 iteration.

STORM:

From the dispel4py directory launch the Storm submission client:

dispel4py storm dispel4py.examples.graph_testing.pipeline_composite -m remote

-

dispel4py.examples.graph_testing.pipeline_composite.addTwo(data)¶ Returns 2 + data.

-

dispel4py.examples.graph_testing.pipeline_composite.divideByTwo(data)¶ Returns data/2.

-

dispel4py.examples.graph_testing.pipeline_composite.multiplyByFour(data)¶ Returns 4 * data.

-

dispel4py.examples.graph_testing.pipeline_composite.subtract(data, n)¶ Returns data - n.
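The four functions can be chained in plain Python to see what the pipeline computes. This sketch does not build a dispel4py graph. The order of the chain is inferred from the PE names in the sample output, and both the input value 1 and the argument n=1 are assumptions chosen so that the result agrees with the sample output.

```python
from functools import partial, reduce

def addTwo(data):
    return 2 + data

def multiplyByFour(data):
    return 4 * data

def divideByTwo(data):
    return data / 2

def subtract(data, n):
    return data - n

# Assumed order and parameters; n=1 is not stated in the documentation.
chain = [addTwo, multiplyByFour, divideByTwo, partial(subtract, n=1)]

# Feed the assumed input value 1 through each function in turn.
result = reduce(lambda value, func: func(value), chain, 1)
print(result)
```

Under these assumptions the chain evaluates ((1 + 2) * 4) / 2 - 1, which equals 5. Python 3 prints it as 5.0 because `/` is true division.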

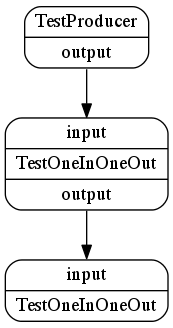

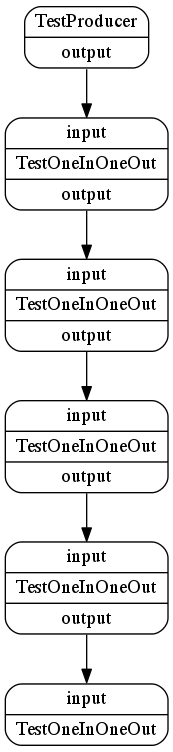

dispel4py.examples.graph_testing.pipeline_test module¶

This is a dispel4py graph which produces a pipeline workflow with one producer node (prod) and 5 consumer nodes. It can be executed with MPI and STORM.

Execution:

MPI: Change to the dispel4py directory.

Execute the MPI mapping as follows:

mpiexec -n <number mpi_processes> dispel4py mpi [-a name_dispel4py_graph] [-f file containing the input dataset in JSON format] [-i number of iterations/runs] [-s]

The argument ‘-s’ forces the graph to run in simple processing mode, which means that the first node of the graph is executed in one process and the remaining nodes are executed in a second process. When [-i number of iterations/runs] is not given, the graph is executed once by default.

For example:

mpiexec -n 6 dispel4py mpi dispel4py.examples.graph_testing.pipeline_test

Note

Each node in the graph is executed as a separate MPI process. This graph has 6 nodes. For this reason we need at least 6 MPI processes to execute it.

Output:

Processing 10 iterations.
Processes: {'TestProducer0': [5], 'TestOneInOneOut5': [2], 'TestOneInOneOut4': [4], 'TestOneInOneOut3': [3], 'TestOneInOneOut2': [1], 'TestOneInOneOut1': [0]}
TestProducer0 (rank 5): Processed 10 iterations.
TestOneInOneOut1 (rank 0): Processed 10 iterations.
TestOneInOneOut2 (rank 1): Processed 10 iterations.
TestOneInOneOut3 (rank 3): Processed 10 iterations.
TestOneInOneOut4 (rank 4): Processed 10 iterations.
TestOneInOneOut5 (rank 2): Processed 10 iterations.

STORM:

From the dispel4py directory launch the Storm submission client:

dispel4py storm dispel4py.examples.graph_testing.pipeline_test -m remote

Output:

Spec'ing TestOneInOneOut1
Spec'ing TestOneInOneOut2
Spec'ing TestOneInOneOut3
Spec'ing TestOneInOneOut4
Spec'ing TestOneInOneOut5
Spec'ing TestProducer6
spouts {'TestProducer6': ... }
bolts {'TestOneInOneOut5': ... }
Created Storm submission package in /var/folders/58/7bjr3s011kgdtm5lx58prc_40000gn/T/tmp5ePEq3
Running: java -client -Dstorm.options= -Dstorm.home= ...
Submitting topology 'TestTopology' to storm.example.com:6627 ...

-

dispel4py.examples.graph_testing.pipeline_test.testPipeline(graph)¶ Adds a pipeline to the given graph.

Return type: the created graph

dispel4py.examples.graph_testing.producer_tee module¶

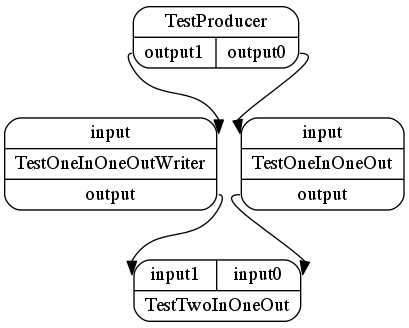

dispel4py.examples.graph_testing.split_merge module¶

This is a dispel4py graph which produces a workflow that splits the data and sends it to two nodes (cons1 and cons2) and the output of those two nodes is merged by another node (last).

It can be executed with MPI and STORM.

MPI: Change to the dispel4py directory.

Execute the MPI mapping as follows:

mpiexec -n <number mpi_processes> dispel4py mpi <module|module file> [-a name_dispel4py_graph] [-f file containing the input dataset in JSON format] [-i number of iterations/runs] [-s]

The argument ‘-s’ forces the graph to run in simple processing mode, which means that the first node of the graph is executed in one process and the remaining nodes are executed in a second process. When [-i number of iterations/runs] is not given, the graph is executed once by default.

For example:

mpiexec -n 4 dispel4py mpi dispel4py.examples.graph_testing.split_merge

Note

Each node in the graph is executed as a separate MPI process. This graph has 4 nodes. For this reason we need at least 4 MPI processes to execute it.

Output:

Processing 1 iteration.
Processes: {'TestProducer0': [1], 'TestOneInOneOutWriter2': [2], 'TestTwoInOneOut3': [0], 'TestOneInOneOut1': [3]}
TestProducer0 (rank 1): Processed 1 iteration.
TestOneInOneOut1 (rank 3): Processed 1 iteration.
TestOneInOneOutWriter2 (rank 2): Processed 1 iteration.
TestTwoInOneOut3 (rank 0): Processed 2 iterations.

-

dispel4py.examples.graph_testing.split_merge.testSplitMerge()¶ Creates the split/merge graph with 4 nodes.

Return type: the created graph

dispel4py.examples.graph_testing.teecopy module¶

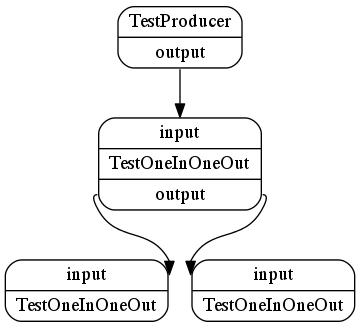

This is a dispel4py graph which produces a workflow that copies the data (from node prod) to two nodes (cons1 and cons2).

If you compare this graph with grouping_onetoall, they look quite similar. However, they do different things. In this example, the nodes cons1 and cons2 are different PEs and the node prod sends the same data to both PEs.

It can be executed with MPI and STORM.

MPI: Change to the dispel4py directory.

Execute the MPI mapping as follows:

mpiexec -n <number mpi_processes> dispel4py mpi <module|module file> [-a name_dispel4py_graph] [-f file containing the input dataset in JSON format] [-i number of iterations/runs] [-s]

The argument ‘-s’ forces the graph to run in simple processing mode, which means that the first node of the graph is executed in one process and the remaining nodes are executed in a second process. When [-i number of iterations/runs] is not given, the graph is executed once by default.

For example:

mpiexec -n 3 dispel4py mpi dispel4py.examples.graph_testing.teecopy

Note

Each node in the graph is executed as a separate MPI process. This graph has 3 nodes. For this reason we need at least 3 MPI processes to execute it.

Output:

Processing 1 iteration.
Processes: {'TestProducer0': [0], 'TestOneInOneOut2': [2], 'TestOneInOneOut1': [1]}
TestProducer0 (rank 0): Processed 1 iteration.
TestOneInOneOut2 (rank 2): Processed 1 iteration.
TestOneInOneOut1 (rank 1): Processed 1 iteration.

-

dispel4py.examples.graph_testing.teecopy.testTee()¶ Creates a graph with two consumer nodes and a tee connection.

Return type: the created graph

dispel4py.examples.graph_testing.testing_PEs module¶

Example PEs for test workflows, implementing various patterns.

-

class dispel4py.examples.graph_testing.testing_PEs.NumberProducer(numIterations=1)¶

Bases: dispel4py.core.GenericPE

process(inputs)¶

-

class dispel4py.examples.graph_testing.testing_PEs.ProvenanceLogger¶

Bases: dispel4py.core.GenericPE

process(inputs)¶

-

class dispel4py.examples.graph_testing.testing_PEs.RandomFilter¶

Bases: dispel4py.core.GenericPE

This PE randomly filters the input.

input_name= 'input'¶

output_name= 'output'¶

process(inputs)¶

-

class dispel4py.examples.graph_testing.testing_PEs.RandomWordProducer¶

Bases: dispel4py.core.GenericPE

This PE produces a random word as an output.

process(inputs=None)¶

words= ['dispel4py', 'computing', 'mpi', 'processing', 'simple', 'analysis', 'data']¶

-

class dispel4py.examples.graph_testing.testing_PEs.TestDelayOneInOneOut(delay=1)¶

Bases: dispel4py.core.GenericPE

This PE outputs the input data.

process(inputs)¶

-

class dispel4py.examples.graph_testing.testing_PEs.TestMultiProducer(num_output=10)¶

Bases: dispel4py.core.GenericPE

-

class dispel4py.examples.graph_testing.testing_PEs.TestOneInOneOut¶

Bases: dispel4py.core.GenericPE

This PE outputs the input data.

process(inputs)¶

-

class dispel4py.examples.graph_testing.testing_PEs.TestOneInOneOutWriter¶

Bases: dispel4py.core.GenericPE

This PE copies the input to an output, but it uses the write method. Remember that the write method can produce more than one output block within a single processing step.

process(inputs)¶
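The idea of writing several output blocks during one processing step can be illustrated with a small stand-in class. `SketchWriterPE` is hypothetical and does not derive from dispel4py.core.GenericPE; its `write` method only records what would be emitted, and the list-valued input is an assumption for the example.

```python
class SketchWriterPE:
    """Hypothetical stand-in for a PE; not the dispel4py GenericPE class."""

    def __init__(self):
        # Each entry is an (output name, data) pair in emission order.
        self.emitted = []

    def write(self, name, data):
        """Record one output block on the named output."""
        self.emitted.append((name, data))

    def process(self, inputs):
        # One call to process may write any number of blocks.
        for item in inputs["input"]:
            self.write("output", item)

pe = SketchWriterPE()
pe.process({"input": [1, 2, 3]})
print(pe.emitted)
```

A single call to `process` here results in three separate output blocks, whereas returning one value from `process` would yield only one.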

-

class dispel4py.examples.graph_testing.testing_PEs.TestProducer(numOutputs=1)¶

Bases: dispel4py.core.GenericPE

This PE produces a range of numbers.

-

class dispel4py.examples.graph_testing.testing_PEs.TestTwoInOneOut¶

Bases: dispel4py.core.GenericPE

This PE takes two inputs and merges the data into one output string.

process(inputs)¶

-

class dispel4py.examples.graph_testing.testing_PEs.WordCounter¶

Bases: dispel4py.core.GenericPE

This PE counts how many times it receives each word. For each input it outputs the same word together with its current count.

input_name= 'input'¶

output_name= 'output'¶
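The counting behaviour described for WordCounter can be sketched with a small self-contained class. `WordCountSketch` is a hypothetical illustration and not the library class; the shape of the input and output dictionaries is an assumption.

```python
from collections import defaultdict

class WordCountSketch:
    """Hypothetical word counter; keeps one running count per word."""

    def __init__(self):
        self.counts = defaultdict(int)

    def process(self, inputs):
        word = inputs["input"]
        self.counts[word] += 1
        # Emit the word together with the number of times seen so far.
        return {"output": [word, self.counts[word]]}

counter = WordCountSketch()
results = [counter.process({"input": w})["output"] for w in ["mpi", "data", "mpi"]]
print(results)
```

Because the count is kept per instance, this only yields correct totals when every occurrence of a word reaches the same instance, which is what a group-by grouping provides.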

-

dispel4py.examples.graph_testing.unconnected_pipeline module¶

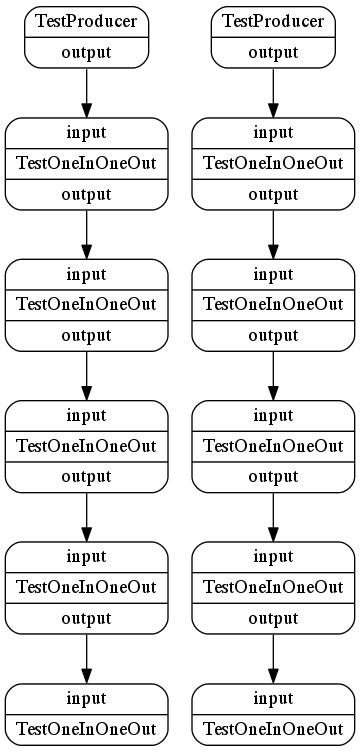

This is a dispel4py graph which produces two pipeline workflows which are unconnected.

It can be executed with MPI and STORM.

MPI: Change to the dispel4py directory.

Execute the MPI mapping as follows:

mpiexec -n <number mpi_processes> dispel4py mpi <module|module file> [-a name_dispel4py_graph] [-f file containing the input dataset in JSON format] [-i number of iterations/runs] [-s]

The argument ‘-s’ forces the graph to run in simple processing mode, which means that the first node of the graph is executed in one process and the remaining nodes are executed in a second process. When [-i number of iterations/runs] is not given, the graph is executed once by default.

For example:

mpiexec -n 12 dispel4py mpi dispel4py.examples.graph_testing.unconnected_pipeline

Note

Each node in the graph is executed as a separate MPI process. This graph has 12 nodes. For this reason we need at least 12 MPI processes to execute it.

Output:

Processing 1 iteration.
Processes: {'TestProducer0': [9], 'TestProducer6': [6], 'TestOneInOneOut9': [7], 'TestOneInOneOut8': [8], 'TestOneInOneOut7': [11], 'TestOneInOneOut5': [1], 'TestOneInOneOut4': [2], 'TestOneInOneOut3': [4], 'TestOneInOneOut2': [0], 'TestOneInOneOut1': [10], 'TestOneInOneOut11': [3], 'TestOneInOneOut10': [5]}
TestProducer6 (rank 6): Processed 1 iteration.
TestProducer0 (rank 9): Processed 1 iteration.
TestOneInOneOut1 (rank 10): Processed 1 iteration.
TestOneInOneOut7 (rank 11): Processed 1 iteration.
TestOneInOneOut2 (rank 0): Processed 1 iteration.
TestOneInOneOut8 (rank 8): Processed 1 iteration.
TestOneInOneOut9 (rank 7): Processed 1 iteration.
TestOneInOneOut10 (rank 5): Processed 1 iteration.
TestOneInOneOut3 (rank 4): Processed 1 iteration.
TestOneInOneOut4 (rank 2): Processed 1 iteration.
TestOneInOneOut11 (rank 3): Processed 1 iteration.
TestOneInOneOut5 (rank 1): Processed 1 iteration.

-

dispel4py.examples.graph_testing.unconnected_pipeline.testPipeline(graph)¶ Creates a pipeline and adds it to the given graph.

Return type: the modified graph

-

dispel4py.examples.graph_testing.unconnected_pipeline.testUnconnected()¶ Creates a graph with two unconnected pipelines.

Return type: the created graph

dispel4py.examples.graph_testing.word_count module¶

Counts words produced by a WordProducer.

dispel4py.examples.graph_testing.word_count_filter module¶

Counts words produced by RandomWordProducer and filtered by RandomFilter.

Module contents¶

Examples of dispel4py graphs.