Simulation with SimPy - In Depth Manual

| Authors: | |
|---|---|
| SimPy release: | 2.2 |
| SimPy Web-site: | |
| Python-Version: | 2.3 and later (not 3.0) |
| Date: | September 27, 2011 |


This document describes SimPy version 2.2. Changes from the previous version are listed in Appendix A0.

Note

This document does not describe the object-oriented (OO) API that was added to SimPy in version 2.0. SimPy 2.0 is fully backward compatible with previous versions. The procedural API and the OO API co-exist happily in SimPy 2.x.

| [1] | The variable version, imported from SimPy.Simulation, contains the revision number and date of the current version. |

Introduction

SimPy is a Python-based discrete-event simulation system that models active components, such as messages, customers, trucks, and planes, as parallel processes. It provides a number of tools for the simulation programmer, including Processes to model active entities, three kinds of resource facilities (Resources, Levels, and Stores), and Monitors and Tallys for recording results.

The basic active elements of a SimPy model are process objects (i.e., objects of a Process class – see Processes). As a general practice and for brevity we will often refer to both process objects and their classes as “processes.” Thus, “process” may refer to a Process class or to a process object, depending on context. To avoid ambiguity or for added emphasis we often explicitly state whether a class or an object is intended. In addition, we use “entity” to refer to process objects, as is common in the simulation literature. Here, though, the term is restricted to process objects and is not used for any other elements of the simulation.

During the simulation, process objects may be delayed for fixed or random times, queued at resource facilities, and may be interrupted by or interact in other ways with other processes and components. For example, automobiles in a model of a gas station may have to queue while waiting for a pump to become available. On obtaining a pump, a car takes some time to fill its tank before releasing the pump.

A SimPy script contains the declaration of one or more Process classes and the creation of process objects (entities) from them. Each process object executes its Process Execution Method (referred to later as a PEM), a method that determines its actions. Each PEM runs in parallel with (and may interact with) the PEMs of other process objects.

There are three types of resource facilities (Resources, Levels, and Stores). Each type models a congestion point where process objects may have to queue while waiting to acquire or, in some cases, to deposit a resource.

A Resource has one or more resource units, each of which may be used by a process object. Extending the example above, the gas station might be modeled as a resource with its pumps as resource units. On receiving a request for a pump from a car, the gas station resource automatically queues waiting cars until a pump becomes available. The car holds the pump until it releases it for possible use by another car.

Levels model the supply and consumption of a homogeneous undifferentiated “material.” The amount of material held by a Level at any time is fully described by a scalar (real or integer), which process objects can increase or decrease. For example, a gas (petrol) station stores gas in large storage tanks. The amount in the tanks is increased by tanker deliveries and reduced by cars refuelling. In contrast to the requirement for Resource units, a car need not return the gas to the Level.

Stores model the production and consumption of individual items. A Store holds a list of items, and process objects can insert items into or remove items from the list. For example, surgical procedures (treated as process objects) require specific lists of personnel and equipment that may be treated as the items in a Store facility such as a clinic or hospital. The items held in a Store can be of any Python type. In particular, they can be process objects, and this may be exploited to facilitate modeling Master/Slave relationships.

In addition to the number of free units or quantities, resource facilities all hold queues of waiting process objects which are operated automatically by SimPy. They also operate a reneging mechanism so that a process object can abandon the wait.

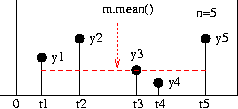

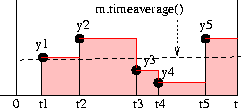

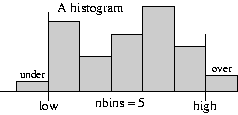

Monitors and Tallys are used to compile statistics as a function of time on variables such as waiting times and queue lengths. These statistics consist of simple averages and variances, time-weighted averages, or histograms. They can be gathered on the queues associated with Resources, Levels and Stores. For example we may collect data on the average number of cars waiting at a gas station and the distribution of their waiting times. Tallys update the current statistics as the simulation progresses, but cannot preserve complete time-series records. Monitors can preserve complete time-series records that may later be used for more advanced post-simulation analyses.
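The difference between a simple average and a time-weighted average matters for quantities such as queue length, where each value persists for a different length of time. The following is a minimal plain-Python sketch of the two calculations, not SimPy's Monitor or Tally API; the function names and the sample record are hypothetical, and each (time, value) pair is assumed to hold until the next observation.

```python
def simple_mean(observations):
    """Unweighted mean of the recorded values, ignoring how long each one lasted."""
    return sum(value for _, value in observations) / float(len(observations))


def time_average(observations, end_time):
    """Time-weighted mean of a piecewise-constant variable.

    observations: (time, value) pairs sorted by time; each value is assumed
    to hold from its own time until the next observation (or end_time).
    """
    total = 0.0
    boundaries = [t for t, _ in observations[1:]] + [end_time]
    for (t, value), t_next in zip(observations, boundaries):
        total += value * (t_next - t)      # value weighted by how long it lasted
    return total / (end_time - observations[0][0])


# Hypothetical queue-length record: 0 cars from t=0, 3 cars from t=2, 1 car from t=3 to t=10.
queue_length = [(0.0, 0), (2.0, 3), (3.0, 1)]
print(simple_mean(queue_length))          # about 1.33: three equally weighted values
print(time_average(queue_length, 10.0))   # (0*2 + 3*1 + 1*7) / 10 = 1.0
```

The simple mean over-weights the brief spell with three cars waiting; the time-weighted mean counts each value in proportion to how long it lasted.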

Before attempting to use SimPy, you should be able to write Python code. In particular, you should be able to define and use classes and their objects. Python is free and usable on most platforms. It is not described here; you can find out more about it and download it from the Python web-site (http://www.Python.org). SimPy requires Python 2.3 or later.


Simulation with SimPy

To use the SimPy simulation system you must import its Simulation module (or one of the alternatives):

from SimPy.Simulation import *

All discrete-event simulation programs automatically maintain the current simulation time in a software clock. This cannot be changed by the user directly. In SimPy the current clock value is returned by the now() function.

At the start of the simulation the software clock is set to 0.0. While the simulation program runs, simulation time steps forward from one event to the next. An event occurs whenever the state of the simulated system changes. For example, an event might be the arrival or departure of a car from the gas station.
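The next-event time advance described above can be sketched in a few lines of plain Python. This illustrates the idea only and is not SimPy's internal scheduler: the event list is a heap ordered by time, and the clock jumps directly from one event time to the next, skipping the idle time in between. The function name and the event descriptions are hypothetical.

```python
import heapq


def run_events(events, until):
    """Execute (time, description) events in time order.

    Returns the executed events and the final clock value. In this sketch the
    run stops when the event list is empty or the next event lies after `until`.
    """
    schedule = list(events)
    heapq.heapify(schedule)                    # event list ordered by time
    clock = 0.0                                # the software clock starts at 0.0
    executed = []
    while schedule and schedule[0][0] <= until:
        clock, what = heapq.heappop(schedule)  # jump straight to the next event
        executed.append((clock, what))
    return executed, clock


log, final_time = run_events(
    [(3.5, "car 2 arrives"), (9.0, "car 1 departs"), (1.2, "car 1 arrives")],
    until=100.0)
print(log)         # events in time order: 1.2, then 3.5, then 9.0
print(final_time)  # 9.0: no events remain, so the clock stays at the last one
```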

The following statement initializes global simulation variables and sets the software clock to zero. It must appear in the script before any SimPy process objects are activated.

initialize( )

This is followed by SimPy statements creating and activating process objects. Activation of process objects adds events to the simulation schedule. Execution of the simulation itself starts with the following statement:

simulate(until=endtime)

The simulation starts, and SimPy seeks and executes the first scheduled event. Having executed that event, the simulation seeks and executes the next event, and so on.

Typically a simulation terminates when endtime is reached, but it can be stopped at any time by the command:

stopSimulation( )

now( ) will then equal the time at which this was called. The simulation also stops if there are no more events to execute (so now( ) equals the time at which the last scheduled event occurred).

After the simulation has stopped, further statements can be executed. now() will retain the time of stopping and data held in Monitors will be available for display or further analysis.

The following fragment shows only the main block in a simulation program (complete, runnable examples are shown in Example 1 and Example 2). Here Message is a (previously defined) Process class and m is defined as an object of that class, that is, a particular message. Activating m has the effect of scheduling at least one event by starting the PEM of m (here called go). The simulate(until=1000.0) statement starts the simulation itself, which immediately jumps to the first scheduled event. It will continue until it runs out of events to execute or the simulation time reaches 1000.0. When the simulation stops, the (previously written) Report function is called to display the results:

initialize()
m = Message()
activate(m,m.go(),at=0.0)
simulate(until=1000.0)
Report() # report results when the simulation finishes

The object-oriented interface

An object-oriented API was added in SimPy 2.0. It is described more fully in SimPyOO_API. It defines a class of Simulation objects and makes running multiple simulations cleaner and easier. It is compatible with the procedural version described in this Manual. Using the object-oriented API, the program fragment listed at the end of the previous subsection would look like this:

s=Simulation()
s.initialize()
m = Message(sim=s)
s.activate(m,m.go(),at=0.0)
s.simulate(until=1000.0)
Report() # report results when the simulation finishes

Further examples of the OO style exist in the SimPyModels directory and the Bank Tutorial.

Alternative SimPy simulation libraries

In addition to SimPy.Simulation, SimPy provides four alternative simulation libraries which have the basic SimPy.Simulation capabilities, plus additional facilities:

- SimPy.SimulationTrace for program tracing: With from SimPy.SimulationTrace import *, any SimPy program automatically generates detailed event-by-event tracing output. This makes the library ideal for program development/testing and for teaching SimPy.

- SimPy.SimulationRT for real time synchronization: from SimPy.SimulationRT import * facilitates synchronizing simulation time and real (wall-clock) time. This capability can be used to implement, e.g., interactive game applications or to demonstrate a model’s execution in real time.

- SimPy.SimulationStep for event-stepping through a simulation: The import from SimPy.SimulationStep import * provides an API for stepping through a simulation event by event. This can assist with debugging models, interacting with them on an event-by-event basis, getting event-by-event output from a model (e.g. for plotting purposes), etc.

- SimPy.SimulationGUIDebug for event-stepping through a simulation with a GUI: from SimPy.SimulationGUIDebug import * provides an API for stepping through a simulation event-by-event, with a GUI for user control. The event list, Process and Resource objects are shown in windows. This is useful for debugging models and for teaching discrete event simulation with SimPy.


Processes

The active objects for discrete-event simulation in SimPy are process objects – instances of some class that inherits from SimPy’s Process class.

For example, if we are simulating a computing network we might model each message as an object of the class Message. When message objects arrive at the computing network they make transitions between nodes, wait for service at each one, are served for some time, and eventually leave the system. The Message class specifies all the actions of each message in its Process Execution Method (PEM). Individual message objects are created as the simulation runs, and their evolutions are directed by the Message class’s PEM.

Defining a process

Each Process class inherits from SimPy’s Process class. For example the header of the definition of a new Message Process class would be:

class Message(Process):

At least one Process Execution Method (PEM) must be defined in each Process class [2]. A PEM may have arguments in addition to the required self argument that all methods must have. Naturally, other methods and, in particular, an __init__ method, may be defined.

| [2] | More than one can be defined but only one can be executed by any process object. |

A Process Execution Method (PEM) defines the actions that are performed by its process objects. Each PEM must contain at least one of the yield statements, described later. This makes it a Python generator function so that it has resumable execution – it can be restarted again after the yield statement without losing its current state. A PEM may have any name of your choice. For example it may be called execute( ) or run( ).

“The yield statements are simulation commands which affect an ongoing life-cycle of Process objects. These statements control the execution and synchronization of multiple processes. They can delay a process, put it to sleep, request a shared resource or provide a resource. They can add new events on the simulation event schedule, cancel existing ones, or cause processes to wait for a state change.”
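The resumable execution that makes a PEM work is ordinary Python generator behaviour, and it can be observed without SimPy at all. In the sketch below, the generator pem is hypothetical and the tuples it yields are only loosely modelled on yield hold,self,t (in Python, that statement yields the tuple (hold, self, t)). Local variables keep their values from one yield to the next.

```python
def pem():
    """A plain generator standing in for a PEM (no SimPy involved)."""
    count = 1
    yield ("hold", 100.0)    # execution is suspended here ...
    count += 1               # ... and resumes here, with count still equal to 1
    yield ("hold", 5.0)
    yield ("done", count)


p = pem()                    # calling a generator function runs none of its body yet
print(next(p))               # ('hold', 100.0)
print(next(p))               # ('hold', 5.0)
print(next(p))               # ('done', 2)
```

Roughly speaking, a simulation kernel plays the role of the caller here: it receives each yielded command and decides when to resume the generator.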

For example, here is the Process Execution Method, go(self), for the Message class. Upon activation it prints out the current time, the message object’s identification number and the word “Starting”. After a simulated delay of 100.0 time units (in the yield hold, ... statement) it announces that this message object has “Arrived”:

def go(self):
    print now(), self.i, 'Starting'
    yield hold,self,100.0
    print now(), self.i, 'Arrived'

A process object’s PEM starts execution when the object is activated, provided the simulate(until= ...) statement has been executed.

A Process class may also define an __init__(self, ...) method, where ... indicates method arguments. This method initializes the process object, setting values for some or all of its attributes. As for any subclass in Python, the first line of this method must call the Process class’s __init__( ) method in the form:

Process.__init__(self)

You can then use additional commands to initialize attributes of the Process class’s objects. You can also override the standard name attribute of the object.

The __init__( ) method is called whenever you create a new process object. If you do not wish to provide for any attributes other than a name, the __init__ method may be dispensed with. An example of an __init__( ) method is shown in the example below.

Creating a process object

An entity (process object) is created in the usual Python manner by calling the class. If a Process class does not define an __init__ method, it takes a single argument, name, which defaults to 'a_process'. A Process class that defines its own __init__ method can override this.

For example to create a new Message object with a name Message23:

m = Message(name="Message23")
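The __init__ pattern and the default name can be illustrated with a hypothetical stand-in base class. ToyProcess below is not SimPy's Process; it only mimics the name argument and its 'a_process' default, so that the subclassing rules can be run and checked without SimPy.

```python
class ToyProcess(object):
    """Hypothetical stand-in for SimPy's Process base class (illustration only)."""

    def __init__(self, name="a_process"):
        self.name = name


class Message(ToyProcess):
    def __init__(self, i, length):
        # First call the base class __init__, here also overriding the name.
        ToyProcess.__init__(self, name="Message%d" % i)
        self.i = i            # identification number
        self.len = length     # message length


class Ping(ToyProcess):
    pass                      # no __init__ of its own: only `name` can be passed


print(Ping().name)                    # a_process
print(Ping(name="Message23").name)    # Message23
print(Message(7, 33).name)            # Message7
```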

Note

When working through this and all other SimPy manuals, the reader is encouraged to type in, run and experiment with all examples as she goes. No better way of learning exists than doing! A suggestion: if you want to see how a SimPy model is being executed, trace it by replacing from SimPy.Simulation import * with from SimPy.SimulationTrace import *. Any Python environment is suitable – an interactive Python session, IDLE, IPython, Scite . . .

Example 1: This is a complete, runnable SimPy script. We declare a Message class and define an __init__( ) method and a PEM called go( ). The __init__( ) method provides instance variables for an identification number and a message length. We do not actually use the len attribute in this example.

Two messages, p1 and p2, are created. p1 and p2 are activated to start at simulation times 0.0 and 6.0, respectively. Nothing happens until the simulate(until=200) statement. When both messages have finished (at time 6.0+100.0=106.0), there are no more events, so the simulation stops at that time:

Running this program gives the following output:

0 1 Starting
6.0 2 Starting
100.0 1 Arrived
106.0 2 Arrived
Current time is 106.0

Elapsing time in a Process

A PEM uses the yield hold command to temporarily delay a process object’s operations.

yield hold

yield hold,self,t

Causes the process object to delay t time units [3]. After the delay, it continues with the next statement in its PEM. During the hold the object’s operations are suspended.

| [3] | unless it is further delayed by being interrupted. This is used to model any elapsed time an entity might be involved in, for example while it is passively being provided with service. |

Example 2: In this example the Process Execution Method, buy, has an extra argument, budget:

Starting and stopping SimPy Process Objects

A process object is “passive” when first created, i.e., it has no scheduled events. It must be activated to start its Process Execution Method. To activate an instance of a Process class you can use either the activate function or the start method of the Process. (see the Glossary for an explanation of the modified Backus-Naur Form (BNF) notation used).

activate

activate(p, p.pemname([args])[,{at=now()|delay=0}][,prior=False])

activates process object p, provides its Process Execution Method p.pemname( ) with arguments args and possibly assigns values to the other optional parameters. The default is to activate at the current time (at=now( )) with no delay (delay=0.0) and prior set to False. You may assign other values to at, delay, and prior.

Example: to activate a process object, cust, with name cust001 at time 10.0 using a PEM called lifetime:

activate(cust,cust.lifetime(name='cust001'),at=10.0)

However, delay overrides at, in the sense that when a delay=period clause is included, then activation occurs at now( ) or now( )+period (whichever is larger), irrespective of what value of t is assigned in the at=t clause. This is true even when the value of period in the delay clause is zero, or even negative. So it is better and clearer to choose one (or neither) of at=t and delay=period, but not both.
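The rule for combining at and delay can be written as a small function. This is a sketch of the rule as stated here, not SimPy's own code; the function name is hypothetical, and raising ValueError for an at time in the past merely stands in for the error report that ends the simulation on a retroactive activation.

```python
def activation_time(now, at=None, delay=None):
    """Time at which a process would be activated under the at/delay rule."""
    if delay is not None:
        # delay overrides at, and activation is never earlier than now
        return max(now, now + delay)
    if at is not None:
        if at < now:
            raise ValueError("retroactive activation at %s (now is %s)" % (at, now))
        return at
    return now                # defaults: activate at the current time


print(activation_time(5.0, at=20.0, delay=3.0))   # 8.0: delay wins over at
print(activation_time(5.0, delay=-2.0))           # 5.0: a negative delay means now
print(activation_time(5.0, at=20.0))              # 20.0
```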

If you set prior=True, then process object p will be activated before any others that happen to be scheduled for activation at the same time. So, if several process objects are scheduled for activation at the same time and all have prior=True, then the last one scheduled will actually be the first to be activated, the next-to-last one scheduled will be the second to be activated, and so forth.

Retroactive activations that attempt to activate a process object before the current simulation time terminate the simulation with an error report.
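The effect of prior=True on processes scheduled for the same time can be sketched with sort keys. In this hypothetical Schedule class (not SimPy's event list), prior entries sort before ordinary ones at the same time, and among themselves the most recently scheduled comes first. First-scheduled, first-activated order for the ordinary entries is an assumption of the sketch; the text above does not specify it.

```python
import itertools


class Schedule(object):
    """Hypothetical event list illustrating the prior=True ordering rule."""

    def __init__(self):
        self._entries = []
        self._counter = itertools.count()      # 0, 1, 2, ... in scheduling order

    def add(self, time, name, prior=False):
        n = next(self._counter)
        if prior:
            key = (time, 0, -n)   # ahead of ordinary entries; later-scheduled first
        else:
            key = (time, 1, n)    # assumed: first scheduled, first activated
        self._entries.append((key, name))

    def activation_order(self):
        return [name for _, name in sorted(self._entries)]


s = Schedule()
s.add(10.0, "a")
s.add(10.0, "b", prior=True)
s.add(10.0, "c", prior=True)
s.add(10.0, "d")
s.add(5.0, "e", prior=True)
print(s.activation_order())   # ['e', 'c', 'b', 'a', 'd']
```

Because every key contains a unique counter value, two entries never compare equal, so the names themselves are never compared.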

start

An alternative to the activate() function is the start method. There are a number of ways of using it:

p.start(p.pemname([args])[,{at=now()|delay=0}][,prior=False])

is an alternative to the activate statement. p is a Process object. The generator function, pemname, can have any identifier (such as run, lifecycle, etc.). It can have parameters.

For example, to activate the process object cust using the PEM with identifier lifetime at time 10.0 we would use:

cust.start(cust.lifetime(name='cust001'),at=10.0)

p.start([p.ACTIONS()] [,{at=now()|delay=0}][,prior=False])

can be used if p is a Process object whose generator function has the standard identifier ACTIONS, which is recognized as a Process Execution Method. ACTIONS may not have parameters. The call p.ACTIONS() is optional.

For example, to activate the process object cust with the standard PEM identifier ACTIONS at time 10.0, the following are equivalent (and the second version is more convenient):

cust.start(cust.ACTIONS(), at=10.0)
cust.start(at=10.0)

An anonymous instance of Process class PR can be created and activated in one command using start with the standard PEM identifier, ACTIONS.

PR([args]).start([,{at=now()|delay=0}][,prior=False])

Here, PR is the identifier for the Process class and not for a Process object as was p, in the statements above. The generator method ACTIONS may not have parameters.

For example, if Customer is a SimPy Process class we can create and activate an anonymous instance at time 10.0:

Customer(name='cust001').start(at=10.0)

You can use the passivate, reactivate, or cancel commands to control Process objects.

passivate

yield passivate,self

suspends the process object itself. It becomes “passive”. To get it going again another process must reactivate it.

reactivate

reactivate(p[,{at=now()|delay=0}][,prior=False])

reactivates a passive process object, p. It becomes “active”. The optional parameters work as for activate. A process object cannot reactivate itself. To temporarily suspend itself it must use yield hold,self,t instead.

cancel

self.cancel(p)

deletes all scheduled future events for process object p. A process cannot cancel itself. If that is required, use yield passivate,self instead. Only “active” process objects can be canceled.

A process object is “terminated” after all statements in its process execution method have been completed. If the object is still referenced by a variable, it becomes just a data container. This can be useful for extracting information. Otherwise, it is automatically destroyed.

Even activated process objects will not start operating until the simulate(until=endtime) statement is executed. This starts the simulation going and it will continue until time endtime (unless it runs out of events to execute or the command stopSimulation( ) is executed).

Example 3: This simulates a firework with a time fuse. We have put in a few extra yield hold commands for added suspense.

from SimPy.Simulation import *

class Firework(Process):
    def execute(self):
        print now(), ' firework launched'
        yield hold,self, 10.0 # wait 10.0 time units
        for i in range(10):
            yield hold,self,1.0
            print now(), ' tick'
        yield hold,self,10.0 # wait another 10.0 time units
        print now(), ' Boom!!'

initialize()
f = Firework() # create a Firework object, and
               # activate it (with some default parameters)
activate(f,f.execute(),at=0.0)
simulate(until=100)

Here is the output. No formatting was attempted so it looks a bit ragged:

0.0 firework launched
11.0 tick
12.0 tick
13.0 tick
14.0 tick
15.0 tick
16.0 tick
17.0 tick
18.0 tick
19.0 tick
20.0 tick
30.0 Boom!!

A source fragment

One useful program pattern is the source. This is a process object with a Process Execution Method (PEM) that sequentially generates and activates other process objects – it is a source of other process objects. Random arrivals can be modeled using random intervals between activations.
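Random arrivals from a source can be sketched without SimPy by accumulating random inter-arrival intervals. The function below is hypothetical; it mirrors the loop structure of a source PEM that creates an entity while the clock is below a finish time and then waits a random interval. Note that Python's random.expovariate takes a rate, so an exponential interval with mean m is drawn with expovariate(1.0 / m).

```python
import random


def arrival_times(mean_interval, finish, seed=None):
    """Creation times of entities produced by a source with exponential
    inter-arrival times (mean `mean_interval`) while the clock is below `finish`."""
    rng = random.Random(seed)         # a private generator, so runs are repeatable
    now = 0.0
    times = []
    while now < finish:
        times.append(now)                             # an entity is created here
        now += rng.expovariate(1.0 / mean_interval)   # then wait a random interval
    return times


print(arrival_times(10.0, 33.0, seed=42))   # starts at 0.0; every time is below 33.0
```

With a constant interval of 10.0 instead of the random one, and a finish time of 33.0, the same loop would create entities at times 0, 10, 20 and 30.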

Example 4: A source. Here a source creates and activates a series of customers who arrive at regular intervals of 10.0 units of time. This continues until the simulation time exceeds the specified finish time, finish, of 33.0. (Of course, to model customers with random inter-arrival times the yield hold statement would use a random variate, such as expovariate( ), instead of the constant 10.0 inter-arrival time used here.) The following example assumes that the Customer class has previously been defined with a PEM called run that does not require any arguments:

class Source(Process):
    def execute(self, finish):
        while now() < finish:
            c = Customer() # create a new customer object, and
                           # activate it (using default parameters)
            activate(c,c.run())
            print now(), ' customer'
            yield hold,self,10.0

initialize()
g = Source() # create the Source object, g,
             # and activate it
activate(g,g.execute(finish=33.0),at=0.0)
simulate(until=100)

Asynchronous interruptions

An active process object can be interrupted by another but cannot interrupt itself.

interrupt

self.interrupt(victim)

The interrupter process object uses its interrupt method to interrupt the victim process object. The interrupt is just a signal. After this statement, the interrupter process object continues its PEM.

For the interrupt to have an immediate effect, the victim process object must be active, that is, it must have an event scheduled for it (it is “executing” a yield hold). If the victim is not active (that is, it is either passive or terminated) the interrupt has no effect. For example, process objects queuing for resource facilities cannot be interrupted because they are passive during their queuing phase.

If interrupted, the victim returns from its yield hold statement prematurely. It must then check to see if it has been interrupted by calling:

interrupted

self.interrupted( )

which returns True if it has been interrupted. The victim can then either continue in the current activity or switch to an alternative, making sure it tidies up the current state, such as releasing any resources it owns.

interruptCause

self.interruptCause

when the victim has been interrupted, self.interruptCause is a reference to the interrupter object.

interruptLeft

self.interruptLeft

gives the time remaining in the interrupted yield hold. The interruption is reset (that is, “turned off”) at the victim’s next call to a yield hold.

interruptReset

self.interruptReset( )

will reset the interruption.

It may be helpful to think of an interruption signal as instructing the victim to determine whether it should interrupt itself. If the victim determines that it should interrupt itself, it then becomes responsible for making any necessary readjustments – not only to itself but also to any other simulation components that are affected. (The victim must take responsibility for these adjustments, because it is the only simulation component that “knows” such details as whether or not it is interrupting itself, when, and why.)

Example 5. A simulation with interrupts. A bus is subject to breakdowns that are modeled as interrupts caused by a Breakdown process. Notice that the yield hold,self,tripleft statement may be interrupted, so if the self.interrupted() test returns True a reaction to it is required. Here, in addition to delaying the bus for repairs, the reaction includes scheduling the next breakdown. In this example the Bus Process class does not require an __init__() method:

from SimPy.Simulation import *

class Bus(Process):

    def operate(self,repairduration,triplength):   # PEM
        tripleft = triplength
        # "tripleft" is the driving time to finish trip
        # if there are no further breakdowns
        while tripleft > 0:
            yield hold,self,tripleft      # try to finish the trip
            # if a breakdown intervenes
            if self.interrupted():
                print self.interruptCause.name, 'at %s' %now()
                tripleft=self.interruptLeft
                # update driving time to finish
                # the trip if no more breakdowns
                self.interruptReset()     # end self-interrupted state
                # update next breakdown time
                reactivate(br,delay=repairduration)
                # impose delay for repairs on self
                yield hold,self,repairduration
                print 'Bus repaired at %s' %now()
            else:   # no breakdowns intervened, so bus finished trip
                break
        print 'Bus has arrived at %s' %now()

class Breakdown(Process):
    def __init__(self,myBus):
        Process.__init__(self,name='Breakdown '+myBus.name)
        self.bus=myBus

    def breakBus(self,interval):      # Process Execution Method
        while True:
            yield hold,self,interval  # driving time between breakdowns
            if self.bus.terminated(): break
            # signal "self.bus" to break itself down
            self.interrupt(self.bus)

initialize()
b=Bus('Bus')     # create a Bus object "b" called "Bus"
activate(b,b.operate(repairduration=20,triplength=1000))
# create a Breakdown object "br" for bus "b", and
br=Breakdown(b)
# activate it with driving time between
# breakdowns equal to 300
activate(br,br.breakBus(300))
simulate(until=4000)
print 'SimPy: No more events at time %s' %now()

The output from this example:

Breakdown Bus at 300
Bus repaired at 320
Breakdown Bus at 620
Bus repaired at 640
Breakdown Bus at 940
Bus repaired at 960
Bus has arrived at 1060
SimPy: No more events at time 1260

The bus arrives at 1060, but the simulation finishes at 1260. Why? The Breakdown PEM, breakBus, consists of a loop, with one breakdown following another at intervals of 300. The last repair finishes at 960, and a breakdown event is then scheduled for 1260. The bus arrives at 1060 and is not affected by that breakdown; at 1260 the Breakdown process finds that the bus has terminated and leaves its loop, so no events remain. These details can easily be checked by importing from SimPy.SimulationTrace and re-running the program.

Where interrupts can occur, the victim of interrupts must test for interrupt occurrence after every appropriate yield hold and react appropriately to it. A victim holding a resource facility when it gets interrupted continues to hold it.
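The times printed by Example 5 can be checked with a little arithmetic. The function below is a plain-Python sketch, not SimPy, and its name is hypothetical. It assumes, as the output suggests, that a breakdown occurs interval time units after the bus starts (or resumes) driving, that each repair takes repair time units, and that after a breakdown the remaining trip time (the value interruptLeft reports) is reduced by the time just driven.

```python
def bus_timeline(triplength, interval, repair):
    """Return (breakdowns, arrival, next_breakdown) for the Example 5 bus.

    breakdowns lists (breakdown time, repaired time) pairs. In this sketch an
    arrival exactly at a breakdown time is treated as an arrival.
    """
    now = 0.0
    tripleft = triplength
    breakdowns = []
    while True:
        next_breakdown = now + interval
        if now + tripleft <= next_breakdown:       # the trip ends first
            return breakdowns, now + tripleft, next_breakdown
        tripleft -= interval                       # what interruptLeft would report
        breakdowns.append((next_breakdown, next_breakdown + repair))
        now = next_breakdown + repair              # driving resumes after the repair


print(bus_timeline(1000, 300, 20))
# ([(300.0, 320.0), (620.0, 640.0), (940.0, 960.0)], 1060.0, 1260.0)
```

The last value, 1260, is the pending breakdown event that keeps the simulation running after the bus has arrived.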

Advanced synchronization/scheduling capabilities

The preceding scheduling constructs all depend on specified time values. That is, they delay processes for a specific time, or use given time parameters when reactivating them. For a wide range of applications this is all that is needed.

However, some applications either require or can profit from an ability to activate processes that must wait for other processes to complete. For example, models of real-time systems or operating systems often use this kind of approach. Event Signalling is particularly helpful in such situations. Furthermore, some applications need to activate processes when certain conditions occur, even though when (or if) they will occur may be unknown. SimPy has a general “wait until” capability to support clean implementation of this approach.

This section describes how SimPy provides event Signalling and wait until capabilities.

Creating and Signalling SimEvents

As mentioned in the Introduction, for ease of expression when no confusion can arise we often refer to both process objects and their classes as “processes”, and mention their object or class status only for added clarity or emphasis. Analogously, we will refer to objects of SimPy’s SimEvent class as “SimEvents” [4] (or, if no confusion can arise, simply as “events”). However, we sometimes mention their object or class character for clarity or emphasis.

| [4] | The name SimEvent was chosen because “event” is already used in Python’s standard library. See Python Library Reference section 7.5 threading – Higher-level threading interface, specifically subsection 7.5.5. |

SimEvent objects must be created before they can be fired by a signal. You create the SimEvent object, sE, from SimPy’s SimEvent class by a statement like the following:

sE = SimEvent(name='I just had a great new idea!')

A SimEvent’s name attribute defaults to a_SimEvent unless you provide your own, as shown here. Its occurred attribute, sE.occurred, is a Boolean that defaults to False. It indicates whether the event sE has occurred.

You program a SimEvent to “occur” or “fire” by “signaling” it like this:

sE.signal(<payload parameter>)

This “signal” is “received” by all processes that are either “waiting” or “queueing” for this event to occur. What happens when they receive this signal is explained in the next section. The <payload parameter> is optional – it defaults to None. It can be of any Python type. Any process can retrieve it from the event’s signalparam attribute, for example by:

message = sE.signalparam

Waiting or Queueing for SimEvents

You can program a process either to “wait” or to “queue” for the occurrence of SimEvents. The difference is that all processes “waiting” for some event are reactivated as soon as it occurs. For example, all firemen go into action when the alarm sounds. In contrast, only the first process in the “queue” for some event is reactivated when it occurs. That is, the “queue” is FIFO [5]. An example might be royal succession – when the present ruler dies: “The king is dead. Long live the (new) king!” (And all others in the line of succession move up one step.)

| [5] | “First-in-First-Out” or FCFS, “First-Come-First-Served” |

You program a process to wait for SimEvents by including in its PEM:

yield waitevent

yield waitevent,self,<events part>

where <events part> can be either:

- one SimEvent object, e.g. myEvent, or

- a tuple of SimEvent objects, e.g. (myEvent,myOtherEvent,TimeOut), or

- a list of SimEvent objects, e.g. [myEvent,myOtherEvent,TimeOut]

If none of the events in the <events part> have occurred, the process is passivated and joined to the list of processes waiting for some event in <events part> to occur (or to recur).

On the other hand, when any of the events in the <events part> occur, then all of the processes “waiting” for those particular events are reactivated at the current time. Then the occurred flag of those particular events is reset to False. Resetting their occurred flag prevents the waiting processes from being constantly reactivated. (For instance, we do not want firemen to keep responding to any such “false alarms.”) For example, suppose the <events part> lists events a, b and c in that order. If events a and c occur, then all of the processes waiting for event a are reactivated. So are all processes waiting for event c but not a. Then the occurred flags of events a and c are reset to False. No direct changes are made to event b or to any processes waiting for it to occur.

You program a process to “queue” for events by including in its PEM:

yield queueevent

yield queueevent,self,<events part>

where the <events part> is as described above.

If none of the events in the <events part> has occurred, the process is passivated and appended to the FIFO queue of processes queuing for some event in <events part> to occur (or recur).

But when any of the events in <events part> occur, the process at the head of the “queue” is taken off the queue and reactivated at the current time. Then the occurred flag of those events that occurred is reset to False as in the “waiting” case.

Finding Which Processes Are Waiting/Queueing for an Event, and Which Events Fired

SimPy automatically keeps current lists of what processes are “waiting” or “queueing” for SimEvents. They are kept in the waits and queues attributes of the SimEvent object and can be read by commands like the following:

TheProcessesWaitingFor_myEvent = myEvent.waits

TheProcessesQueuedFor_myEvent = myEvent.queues

However, you should not attempt to change these attributes yourself.

Whenever myEvent occurs, i.e., whenever a myEvent.signal(...) statement is executed, SimPy does the following:

- If there are any processes waiting or queued for that event, it reactivates them as described in the preceding section.

- If there are no processes waiting or queued (i.e., myEvent.waits and myEvent.queues are both empty), it sets myEvent.occurred to True.
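The bookkeeping described above can be made concrete with a small pure-Python model of a single event. This is not SimPy's code. The class EventModel and its methods are hypothetical, and processes are plain strings. The model handles one event rather than an <events part> tuple or list. It also assumes, as the text implies, that a process which waits or queues for an event that has already occurred continues at once and clears the occurred flag.

```python
from collections import deque


class EventModel(object):
    """Hypothetical stand-in for a SimEvent's waits/queues/occurred bookkeeping."""

    def __init__(self, name):
        self.name = name
        self.occurred = False
        self.waits = []         # "waiting" processes: all are reactivated
        self.queues = deque()   # "queueing" processes: only the head is reactivated

    def _consume_occurrence(self):
        # An earlier, unconsumed occurrence lets the caller continue at once.
        if self.occurred:
            self.occurred = False
            return True
        return False

    def waitevent(self, proc):
        """Return True if proc continues immediately, False if it is passivated."""
        if self._consume_occurrence():
            return True
        self.waits.append(proc)
        return False

    def queueevent(self, proc):
        """Like waitevent, but proc joins the FIFO queue instead."""
        if self._consume_occurrence():
            return True
        self.queues.append(proc)
        return False

    def signal(self):
        """Fire the event and return the processes it reactivates."""
        if not self.waits and not self.queues:
            self.occurred = True            # nobody listening: remember it
            return []
        reactivated = self.waits            # every waiting process goes
        self.waits = []
        if self.queues:
            reactivated.append(self.queues.popleft())  # only the queue head
        return reactivated
```

With two “firemen” waiting and two “heirs” queueing, one signal() reactivates both firemen and only the first heir. The second heir stays in queues for the next occurrence, and the occurred flag remains False.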

SimPy also automatically keeps track of which events were fired when a process object was reactivated. For example, you can get a list of the events that were fired when the object Godzilla was reactivated with a statement like this:

GodzillaRevivedBy = Godzilla.eventsFired

Example 6. This complete SimPy script illustrates these constructs. (It also illustrates that a Process class may have more than one PEM. Here the Wait_Or_Queue class has two PEMs – waitup and queueup.):

from SimPy.Simulation import *

class Wait_Or_Queue(Process):
    def waitup(self, myEvent):      # PEM illustrating "waitevent"
        # wait for "myEvent" to occur
        yield waitevent, self, myEvent
        print 'At %s, some SimEvent(s) occurred that ' \
              'activated object %s.' % (now(), self.name)
        print ' The activating event(s) were %s' \
              % ([x.name for x in self.eventsFired])

    def queueup(self, myEvent):     # PEM illustrating "queueevent"
        # queue up for "myEvent" to occur
        yield queueevent, self, myEvent
        print 'At %s, some SimEvent(s) occurred that ' \
              'activated object %s.' % (now(), self.name)
        print ' The activating event(s) were %s' \
              % ([x.name for x in self.eventsFired])

class Signaller(Process):
    # here we just schedule some events to fire
    def sendSignals(self):
        yield hold, self, 2
        event1.signal()     # fire "event1" at time 2
        yield hold, self, 8
        event2.signal()     # fire "event2" at time 10
        yield hold, self, 5
        event1.signal()     # fire all four events at time 15
        event2.signal()
        event3.signal()
        event4.signal()
        yield hold, self, 5
        event4.signal()     # event4 recurs at time 20

initialize()
# Now create each SimEvent and give it a name
event1 = SimEvent('Event-1')
event2 = SimEvent('Event-2')
event3 = SimEvent('Event-3')
event4 = SimEvent('Event-4')
Event_list = [event3, event4]   # define an event list

s = Signaller()
# Activate Signaller "s" *after* events created
activate(s, s.sendSignals())

w0 = Wait_Or_Queue('W-0')
# create object named "W-0", and set it to
# "waitup" for SimEvent "event1" to occur
activate(w0, w0.waitup(event1))
w1 = Wait_Or_Queue('W-1')
activate(w1, w1.waitup(event2))
w2 = Wait_Or_Queue('W-2')
activate(w2, w2.waitup(Event_list))

q1 = Wait_Or_Queue('Q-1')
# create object named "Q-1", and put it to be first
# in the queue for Event_list to occur
activate(q1, q1.queueup(Event_list))
q2 = Wait_Or_Queue('Q-2')
# create object named "Q-2", and append it to
# the queue for Event_list to occur
activate(q2, q2.queueup(Event_list))

simulate(until=50)

This program outputs:

At 2, some SimEvent(s) occurred that activated object W-0.
 The activating event(s) were ['Event-1']
At 10, some SimEvent(s) occurred that activated object W-1.
 The activating event(s) were ['Event-2']
At 15, some SimEvent(s) occurred that activated object W-2.
 The activating event(s) were ['Event-3']
At 15, some SimEvent(s) occurred that activated object Q-1.
 The activating event(s) were ['Event-3', 'Event-4']
At 20, some SimEvent(s) occurred that activated object Q-2.
 The activating event(s) were ['Event-4']

Each output line, The activating event(s) were ..., lists the contents of the named object’s eventsFired attribute. One of those events “caused” the object to reactivate at the indicated time. Note that at time 15 objects W-0 and W-1 were not affected by the recurrence of event1 and event2, because they had already been reactivated earlier and were no longer waiting. Also at time 15, even though objects W-2, Q-1 and Q-2 were all waiting or queueing for event3, only W-2 and Q-1 were reactivated. Process object Q-2 was not reactivated at that time because it was not first in the queue. Finally, Q-2 was reactivated at time 20, when event4 fired again.

“waituntil” synchronization – waiting for any condition

SimPy provides the waituntil feature, which makes a process’s progress depend on the state of the simulation. This is useful if, for example, you need to reactivate a process when (if ever) the simulation enters the state goodWeather OR (nrCustomers>50 AND price<22.50). Doing that requires interrogative scheduling, i.e., the condition must be tested after every change in state until it becomes True. All other SimPy synchronization constructs are imperative: a process is reactivated by an explicit action, such as an event being signalled or a resource unit being released.

To support this, SimPy must run a special (hidden) process after every change in system state that tests the condition and responds appropriately to its truth-value. This clearly takes more run time than SimPy’s imperative scheduling constructs, so SimPy activates its interrogative testing process only while at least one process is executing a waituntil statement. When no process is doing so, the run time overhead is minimal (about 1 percent extra run time).

yield waituntil

You program a process to wait for a condition to be satisfied by including in its PEM a statement of the form:

yield waituntil, self,<cond>

where <cond> is a reference to a function, without parameters, that returns a Boolean value indicating whether the simulation state or condition to be waited for has occurred.
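Interrogative scheduling can be pictured as a “condition watcher” that re-tests every pending condition after each state change. The class ConditionWatcher and the state dictionary below are hypothetical illustrations, not SimPy internals. The sketch assumes that a process whose condition is already True when it executes waituntil simply continues. Like SimPy’s hidden tester, it does no work while nobody is waiting.

```python
class ConditionWatcher(object):
    """Hypothetical model of the hidden process that services waituntil."""

    def __init__(self):
        self.waiting = []   # (process, zero-argument condition function) pairs

    def waituntil(self, proc, cond):
        """Return True if proc may continue now, False if it must wait."""
        if cond():
            return True
        self.waiting.append((proc, cond))
        return False

    def after_state_change(self):
        """Re-test every pending condition; return the processes to reactivate."""
        if not self.waiting:            # no waituntil pending: skip the work
            return []
        ready, still_waiting = [], []
        for proc, cond in self.waiting:
            if cond():
                ready.append(proc)
            else:
                still_waiting.append((proc, cond))
        self.waiting = still_waiting
        return ready


# Hypothetical simulation state for the condition used as an example above.
state = {'goodWeather': False, 'nrCustomers': 10, 'price': 25.00}


def condition():
    """goodWeather OR (nrCustomers>50 AND price<22.50); takes no parameters."""
    return state['goodWeather'] or (state['nrCustomers'] > 50 and
                                    state['price'] < 22.50)


watcher = ConditionWatcher()
watcher.waituntil('shopper', condition)   # condition is False, so 'shopper' waits
state['nrCustomers'] = 60
first = watcher.after_state_change()      # price still too high: nobody released
state['price'] = 20.00
second = watcher.after_state_change()     # the AND branch now holds
```

The condition function takes no parameters and reads the state it needs from its enclosing scope, which mirrors the <cond> requirement stated above. The killed() function in Example 7 follows the same pattern.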

Example 7. This program uses the yield waituntil ... statement. Here the function killed(), in the life() PEM of the Player process, defines the condition to be waited for:

from SimPy.Simulation import *
import random

class Player(Process):
    def __init__(self, lives=1, name='ImaTarget'):
        Process.__init__(self, name)
        self.lives = lives
        # provide Player objects with a "damage" property
        self.damage = 0

    def life(self):
        self.message = 'Drat! Some %s survived Federation attack!' % (target.name)

        def killed():               # function testing for "damage > 5"
            return self.damage > 5

        while True:
            yield waituntil, self, killed
            self.lives -= 1; self.damage = 0
            if self.lives == 0:
                self.message = '%s wiped out by Federation at ' \
                               'time %s!' % (target.name, now())
                stopSimulation()

class Federation(Process):
    def fight(self):                # simulate Federation operations
        print 'Three %s attempting to escape!' % (target.name)
        while True:
            if random.randint(0, 10) < 2:   # check for hit on player
                target.damage += 1          # hit! increment damage to player
                if target.damage <= 5:      # target survives
                    print 'Ha! %s hit! Damage = %i' % (target.name, target.damage)
                else:
                    if (target.lives - 1) == 0:
                        print 'No more %s left!' % (target.name)
                    else:
                        print 'Now only %i %s left!' % (target.lives - 1, target.name)
            yield hold, self, 1

initialize()
gameOver = 100
# create a Player object named "Romulans"
target = Player(lives=3, name='Romulans')
activate(target, target.life())
# create a Federation object
shooter = Federation()
activate(shooter, shooter.fight())
simulate(until=gameOver)
print target.message

One possible output from this program is shown below. Whether the Romulans are wiped out or some escape depends on what simulation states the randomization feature produces:

Three Romulans attempting to escape!
Ha! Romulans hit! Damage = 1
Ha! Romulans hit! Damage = 2
Ha! Romulans hit! Damage = 3
Ha! Romulans hit! Damage = 4
Ha! Romulans hit! Damage = 5
Now only 2 Romulans left!
Ha! Romulans hit! Damage = 1
Ha! Romulans hit! Damage = 2
Ha! Romulans hit! Damage = 3
Ha! Romulans hit! Damage = 4
Ha! Romulans hit! Damage = 5
Now only 1 Romulans left!
Ha! Romulans hit! Damage = 1
Ha! Romulans hit! Damage = 2
Ha! Romulans hit! Damage = 3
Ha! Romulans hit! Damage = 4
Ha! Romulans hit! Damage = 5
No more Romulans left!
Romulans wiped out by Federation at time 73!

The waituntil construct is so general that in principle it could replace all the other synchronization approaches (but at a run time cost).


Resources

The three resource facilities provided by SimPy are Resources, Levels and Stores. Each models a congestion point where process objects may have to queue up to obtain resources. This section describes the Resource type of resource facility.

An example of queueing for a Resource might be a manufacturing plant in which a Task (modeled as a process object) needs work done by a Machine (modeled as a Resource object). If all of the Machines are currently being used, the Task must wait until one becomes free. A SimPy Resource can have a number of identical units, such as a number of identical machine units. A process obtains a unit of the Resource by requesting it and, when it is finished, releasing it. A Resource maintains a list of process objects that have requested but not yet received one of the Resource’s units (called the waitQ), and another list of processes that are currently using a unit (the activeQ). SimPy creates and updates these queues itself – the user can access them, but should not change them.

Defining a Resource object

A Resource object, r, is established by the following statement:

r = Resource(capacity=1, name='a_resource', unitName='units',
             qType=FIFO, preemptable=False,
             monitored=False, monitorType=Monitor)

where

- capacity is a positive real or integer value that specifies the total number of identical units in Resource object r.

- name is a descriptive name for this Resource object (e.g., 'gasStation').

- unitName is a descriptive name for a unit of the resource (e.g., 'pump').

- qType is either FIFO [5] or PriorityQ. It specifies the queue discipline of the resource’s waitQ; typically, this is FIFO and that is the default value. If PriorityQ is specified, then higher-priority requests waiting for a unit of Resource r are inserted into the waitQ ahead of lower priority requests. See Priority requests for a Resource unit for details.

- preemptable is a Boolean (False or True); typically, this is False and that is the default value. If it is True, then a process requesting a unit of this resource may preempt a lower-priority process in the activeQ, i.e., one that is already using a unit of the resource. See Preemptive requests for a Resource unit for details.

- monitored is a Boolean (False or True). If set to True, then information is gathered on the sizes of r’s waitQ and activeQ, otherwise not.

- monitorType is either Monitor or Tally and indicates the type of Recorder to be used (see Recording Resource queue lengths for an example and additional discussion).

Each Resource object, r, has the following additional attributes:

- r.n, the number of units that are currently free.

- r.waitQ, a queue (list) of processes that have requested but not yet received a unit of r, so len(r.waitQ) is the number of process objects currently waiting.

- r.activeQ, a queue (list) of process objects currently using one of the Resource’s units, so len(r.activeQ) is the number of units that are currently in use.

- r.waitMon, the record (made by a Monitor or a Tally whenever monitored==True) of the activity in r.waitQ. So, for example, r.waitMon.timeaverage() is the average number of processes in r.waitQ. See Recording Resource queue lengths for an example.

- r.actMon, the record (made by a Monitor or a Tally whenever monitored==True) of the activity in r.activeQ.

Requesting and releasing a unit of a Resource

A process can request and later release a unit of the Resource object, r, by using the following yield commands in a Process Execution Method:

yield request

yield request,self,r [,P=0]

requests a unit of Resource r with (optional) real or integer priority value P. If no priority is specified, it defaults to 0. Larger values of P represent higher priorities. See the following sections on Queue Order for more information on how priority values are used. Although this form of request can be used with either the FIFO or the PriorityQ queue type, priority values are ignored when qType==FIFO.

yield release

yield release,self,r

releases the unit of r.

Queue Order

If a requesting process must wait, it is placed into the resource’s waitQ in an order determined by the resource’s qType and preemptable settings and by the priority value used in the request.

Non-priority queueing

If qType is not specified, it defaults to FIFO [5]. In that case processes wait in the usual first-come-first-served order.

If a Resource unit is free when the request is made, the requesting process takes it and moves on to the next statement in its PEM. If no Resource unit is available when the request is made, then the requesting process is appended to the Resource’s waitQ and suspended. The next time a unit becomes available the first process in the r.waitQ takes it and continues its execution. All priority assignments are ignored. Moreover, in the FIFO case no preemption is possible, for preemption requires that priority assignments be recognized. (However, see the Note on preemptive requests with waitQ in FIFO order for one way of simulating such situations.)
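The FIFO bookkeeping just described can be sketched in plain Python. FifoResource is a hypothetical class, not SimPy’s Resource. It only mirrors the n, waitQ and activeQ attributes listed earlier, and it accepts but ignores a priority value, as a FIFO Resource does.

```python
from collections import deque


class FifoResource(object):
    """Hypothetical model of a FIFO Resource's n, waitQ and activeQ."""

    def __init__(self, capacity):
        self.n = capacity        # number of free units
        self.waitQ = deque()     # processes waiting for a unit, in request order
        self.activeQ = []        # processes currently holding a unit

    def request(self, proc, priority=0):
        """Return True if proc gets a unit now, False if it is queued.

        The priority argument is accepted but ignored, as with qType==FIFO.
        """
        if self.n > 0:
            self.n -= 1
            self.activeQ.append(proc)
            return True
        self.waitQ.append(proc)
        return False

    def release(self, proc):
        """Give up proc's unit; return the process that takes it over, if any."""
        self.activeQ.remove(proc)
        if self.waitQ:
            successor = self.waitQ.popleft()   # first come, first served
            self.activeQ.append(successor)
            return successor
        self.n += 1
        return None
```

With capacity 2, requests from c1, c2 and then c3 leave c3 in waitQ, even if it asked with the highest priority. When c1 releases its unit, c3 takes it over and n stays at 0.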

Example. In this complete script, the server Resource object is given two resource units (capacity=2). Because its qType is not specified, it takes the default value, FIFO. Here six clients arrive in the order specified by the program, and they all request a resource unit from the server Resource object at the same time. Even though they all specify a priority value in their requests, it is ignored and they get their Resource units in the same order as their requests:

from SimPy.Simulation import *

class Client(Process):
    inClients = []    # list the clients in order by their requests
    outClients = []   # list the clients in order by completion of service

    def __init__(self, name):
        Process.__init__(self, name)

    def getserved(self, servtime, priority, myServer):
        Client.inClients.append(self.name)
        print self.name, 'requests 1 unit at t =', now()
        # request use of a resource unit
        yield request, self, myServer, priority
        yield hold, self, servtime
        # release the resource
        yield release, self, myServer
        print self.name, 'done at time =', now()
        Client.outClients.append(self.name)

initialize()
# the next line creates the "server" Resource object
server = Resource(capacity=2)   # server defaults to qType==FIFO
# the next lines create some Client process objects
c1 = Client(name='c1'); c2 = Client(name='c2')
c3 = Client(name='c3'); c4 = Client(name='c4')
c5 = Client(name='c5'); c6 = Client(name='c6')
# in the next lines each client requests
# one of the "server"'s Resource units
activate(c1, c1.getserved(servtime=100, priority=1, myServer=server))
activate(c2, c2.getserved(servtime=100, priority=2, myServer=server))
activate(c3, c3.getserved(servtime=100, priority=3, myServer=server))
activate(c4, c4.getserved(servtime=100, priority=4, myServer=server))
activate(c5, c5.getserved(servtime=100, priority=5, myServer=server))
activate(c6, c6.getserved(servtime=100, priority=6, myServer=server))
simulate(until=500)
print 'Request order: ', Client.inClients
print 'Service order: ', Client.outClients

This program results in the following output:

c1 requests 1 unit at t = 0
c2 requests 1 unit at t = 0
c3 requests 1 unit at t = 0
c4 requests 1 unit at t = 0
c5 requests 1 unit at t = 0
c6 requests 1 unit at t = 0
c1 done at time = 100
c2 done at time = 100
c3 done at time = 200
c4 done at time = 200
c5 done at time = 300
c6 done at time = 300
Request order: ['c1', 'c2', 'c3', 'c4', 'c5', 'c6']
Service order: ['c1', 'c2', 'c3', 'c4', 'c5', 'c6']

As illustrated, the clients are served in FIFO order. Clients c1 and c2 each take one Resource unit right away, but the others must wait. When c1 and c2 finish with their resources, clients c3 and c4 can each take a unit, and so forth.

Priority requests for a Resource unit

If the Resource r is defined with qType==PriorityQ, priority values in requests are recognized. If a Resource unit is available when the request is made, the requesting process takes it. If no Resource unit is available when the request is made, the requesting process is inserted into the Resource’s waitQ in order of priority (from high to low) and suspended. For an example where priorities are used, we simply change the preceding example’s specification of the server Resource object to:

server=Resource(capacity=2, qType=PriorityQ)

where, by not specifying it, we allow preemptable to take its default value, False.

Example. After this change the program’s output becomes:

c1 requests 1 unit at t = 0
c2 requests 1 unit at t = 0
c3 requests 1 unit at t = 0
c4 requests 1 unit at t = 0
c5 requests 1 unit at t = 0
c6 requests 1 unit at t = 0
c1 done at time = 100
c2 done at time = 100
c6 done at time = 200
c5 done at time = 200
c4 done at time = 300
c3 done at time = 300
Request order: ['c1', 'c2', 'c3', 'c4', 'c5', 'c6']
Service order: ['c1', 'c2', 'c6', 'c5', 'c4', 'c3']

Although c1 and c2 have the lowest priority values, each requested and got a server unit immediately. That was because at the time they made those requests a server unit was available and the server.waitQ was empty – it did not start to fill until c3 made its request and found all of the server units busy. When c1 and c2 completed service, c6 and c5 (with the highest priority values of all processes in the waitQ) each got a Resource unit, etc.
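Both service orders can be reproduced with a few lines of arithmetic. The function completion_times is a hypothetical sketch that assumes exactly the situation of the two example runs: every client requests at t = 0, all service times are equal, and the first capacity requests find free units. Under PriorityQ the remaining clients are sorted by descending priority, and Python’s stable sort keeps equal priorities in request order.

```python
import heapq


def completion_times(clients, capacity, servtime, priority_queue):
    """Return [(name, done_time), ...] in order of service.

    clients: list of (name, priority) in request order, all requesting at t=0.
    """
    first = clients[:capacity]       # these find a free unit immediately
    waiting = clients[capacity:]     # these go into the waitQ
    if priority_queue:
        waiting = sorted(waiting, key=lambda c: -c[1])   # high priority first
    free_at = [0] * capacity         # heap of times at which units free up
    served = []
    for name, _ in first + waiting:
        start = heapq.heappop(free_at)       # earliest unit to become free
        heapq.heappush(free_at, start + servtime)
        served.append((name, start + servtime))
    return served


clients = [('c%d' % i, i) for i in range(1, 7)]   # c1..c6 with priorities 1..6
fifo = completion_times(clients, 2, 100, priority_queue=False)
prio = completion_times(clients, 2, 100, priority_queue=True)
```

The FIFO run serves c1 to c6 in order, and the PriorityQ run serves c1, c2, c6, c5, c4, c3. Both runs finish clients in pairs at 100, 200 and 300, matching the two outputs above.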

When some processes in the waitQ have the same priority level as a process making a priority request, SimPy inserts the requesting process immediately behind them. Thus for a given priority value, processes are placed in FIFO order. For example, suppose that when a “priority 3” process makes its priority request the current waitQ consists of processes with priorities [5,4,3a,3b,3c,2a,2b,1], where the letters indicate the order in which the equal-priority processes were placed in the queue. Then SimPy inserts this requesting process into the current waitQ immediately behind its last “priority 3” process. Thus, the new waitQ will be [5,4,3a,3b,3c,3d,2a,2b,1], where the inserted process is 3d.

One consequence of this is that, if all priority requests are assigned the same priority value, then the waitQ will in fact be maintained in FIFO order. In that case, using a FIFO instead of a PriorityQ discipline provides some saving in execution time which may be important in simulations where the waitQ may be long.
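The insertion rule can be expressed with Python’s standard bisect module. This is an illustration, not SimPy’s implementation, and priority_insert is a hypothetical helper. Because bisect expects ascending keys, priorities are negated. bisect_right then returns the position just behind the last entry of equal priority.

```python
import bisect


def priority_insert(wait_q, name, priority):
    """Insert (priority, name) into wait_q, which is kept in descending priority.

    The new entry lands immediately behind all entries of equal priority,
    so equal priorities are served in FIFO order.
    """
    keys = [-p for p, _ in wait_q]                 # ascending, as bisect requires
    index = bisect.bisect_right(keys, -priority)
    wait_q.insert(index, (priority, name))
    return wait_q


queue = [(5, '5'), (4, '4'), (3, '3a'), (3, '3b'), (3, '3c'),
         (2, '2a'), (2, '2b'), (1, '1')]
priority_insert(queue, '3d', 3)
order = [name for _, name in queue]   # ['5', '4', '3a', '3b', '3c', '3d', '2a', '2b', '1']
```

Inserting several entries with the same priority into an empty list leaves them in insertion order. This is the FIFO behaviour described in the paragraph above.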

Preemptive requests for a Resource unit

In some models, higher priority processes can actually preempt lower priority processes, i.e., they can take over and use a Resource unit currently being used by a lower priority process whenever no free Resource units are available. A Resource object that allows its units to be preempted is created by setting qType=PriorityQ and preemptable=True.

Whenever a preemptable Resource unit is free when a request is made, then the requesting process takes it and continues its execution. On the other hand, when a higher priority request finds all the units in a preemptable Resource in use, then SimPy adopts the following procedure regarding the Resource’s activeQ and waitQ:

- The process with the lowest priority is removed from the activeQ, suspended, and put at the front of the waitQ – so (barring additional preemptions) it will be the next one to get a resource unit.

- The preempting process gets the vacated resource unit and is inserted into the activeQ in order of its priority value.

- The time for which the preempted process had the resource unit is taken into account when the process gets into the activeQ again. Thus, its total hold time is always the same, regardless of how many times it has been preempted.
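Taken together, the three rules above describe a preemptive-resume discipline. The following sketch models it for a single-unit preemptable Resource. The function name preemptive_resume and its job tuples are hypothetical, and this is not SimPy’s code. It assumes capacity 1, and when a completion and an arrival coincide it lets the completion happen first.

```python
def _insert_by_priority(queue, job):
    """Put job behind every queued job whose priority is >= its own."""
    i = 0
    while i < len(queue) and queue[i][2] >= job[2]:
        i += 1
    queue.insert(i, job)


def preemptive_resume(jobs):
    """Return {name: completion_time} for a one-unit preemptable resource.

    jobs: list of (name, arrival_time, service_time, priority).
    """
    arrivals = sorted(jobs, key=lambda job: job[1])
    wait_q = []       # entries [name, remaining_time, priority]; head served next
    current = None    # the job holding the unit, same layout
    t = 0
    finished = {}
    while arrivals or wait_q or current is not None:
        next_arrival = arrivals[0][1] if arrivals else float('inf')
        if current is not None and t + current[1] <= next_arrival:
            # The unit holder finishes before (or exactly as) the next arrival.
            t += current[1]
            finished[current[0]] = t
            current = wait_q.pop(0) if wait_q else None
            continue
        name, arrival, service, priority = arrivals.pop(0)
        if current is not None:
            current[1] -= arrival - t    # credit the time already served
        t = arrival
        job = [name, service, priority]
        if current is None:
            current = job
        elif priority > current[2]:
            wait_q.insert(0, current)    # preempted job goes to the head of waitQ
            current = job
        else:
            _insert_by_priority(wait_q, job)
    return finished
```

Replaying the two-client example later in this section gives c2 finishing at 150 and c1 at 200. Three jobs with priorities 1, 2 and 3 arriving at 0, 10 and 20 show the most recently preempted job at the head of the waitQ, so they finish at 120, 210 and 300 in the order 3, 2, 1.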

Warning: SimPy only supports preemption of processes which are implemented in the following pattern:

yield request    (one or more request statements)
<some code>
yield hold       (one or more hold statements)
<some code>
yield release    (one or more release statements)

Modeling the preemption of a process in any other pattern may lead to errors or exceptions.

We emphasize that a process making a preemptive request to a fully-occupied Resource gets a resource unit if – but only if – some process in the current activeQ has a lower priority. Otherwise, it will be inserted into the waitQ at a location determined by its priority value and the current contents of the waitQ, using a procedure analogous to that described for priority requests near the end of the preceding section on Priority requests for a Resource unit. This may have the effect of advancing the preempting process ahead of any lower-priority processes that had earlier been preempted and put at the head of the waitQ. In fact, if several preemptions occur before a unit of resource is freed up, then the head of the waitQ will consist of the processes that have been preempted – in order from the last process preempted to the first of them.

Example. In this example two clients of different priority compete for the same resource unit:

from SimPy.Simulation import *

class Client(Process):
    def __init__(self, name):
        Process.__init__(self, name)

    def getserved(self, servtime, priority, myServer):
        print self.name, 'requests 1 unit at t=', now()
        yield request, self, myServer, priority
        yield hold, self, servtime
        yield release, self, myServer
        print self.name, 'done at t=', now()

initialize()
# create the *server* Resource object
server = Resource(capacity=1, qType=PriorityQ, preemptable=True)
# create some Client process objects
c1 = Client(name='c1')
c2 = Client(name='c2')
activate(c1, c1.getserved(servtime=100, priority=1, myServer=server), at=0)
activate(c2, c2.getserved(servtime=100, priority=9, myServer=server), at=50)
simulate(until=500)

The output from this program is:

c1 requests 1 unit at t= 0
c2 requests 1 unit at t= 50
c2 done at t= 150
c1 done at t= 200

Here, c1 is preempted by c2 at t=50. At that time, c1 had held the resource for 50 of its total of 100 time units. When c2 finished and released the resource unit at 150, c1 got the resource back and finished the last 50 time units of its service at t=200.

If preemption occurs when the last few processes in the current activeQ have the same priority value, then the last process in the current activeQ is the one that will be preempted and inserted into the waitQ ahead of all others. To describe this, it will be convenient to indicate by an added letter the order in which equal-priority processes have been inserted into a queue. Now, suppose that a “priority 4” process makes a preemptive request when the current activeQ priorities are [5,3a,3b] and the current waitQ priorities are [2,1,0a,0b]. Then process 3b will be preempted. After the preemption the activeQ will be [5,4,3a] and the waitQ will be [3b,2,1,0a,0b].
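For a multi-unit Resource the same rules can be written as list operations. In this hypothetical sketch (not SimPy’s code), both queues hold (priority, label) tuples in descending priority order with FIFO ties. As a result, the last entry of the activeQ is always the one a preemptive request would displace. The function and helper names are illustrative only.

```python
def insert_by_priority(queue, entry):
    """Place entry behind every entry whose priority is >= its own."""
    i = 0
    while i < len(queue) and queue[i][0] >= entry[0]:
        i += 1
    queue.insert(i, entry)


def preemptive_request(active_q, wait_q, entry):
    """Apply a preemptive request to a fully occupied Resource.

    Returns the preempted entry, or None if entry had to wait instead.
    """
    victim = active_q[-1]              # lowest priority, latest among equals
    if victim[0] < entry[0]:
        active_q.pop()
        wait_q.insert(0, victim)       # preempted process goes to the head
        insert_by_priority(active_q, entry)
        return victim
    insert_by_priority(wait_q, entry)  # nobody to preempt: wait by priority
    return None


def release(active_q, wait_q, label):
    """Free label's unit and hand it to the process at the head of waitQ."""
    for i, (_, held_by) in enumerate(active_q):
        if held_by == label:
            del active_q[i]
            break
    if wait_q:
        insert_by_priority(active_q, wait_q.pop(0))


def labels_of(queue):
    return [label for _, label in queue]
```

Starting from an activeQ of [5,3a,3b] and a waitQ of [2,1,0a,0b], a “priority 4” request preempts 3b and yields [5,4,3a] and [3b,2,1,0a,0b], as stated above. The same functions also replay the step-by-step trace in the Note on preemptive requests with waitQ in FIFO order.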

Note on preemptive requests with waitQ in FIFO order

You may consider doing the following to model a system whose queue of items waiting for a resource is to be maintained in FIFO order, but in which preemption is to be possible. It uses SimPy’s preemptable Resource objects, and uses priorities in a way that allows for preempts while maintaining a FIFO waitQ order.

- Set qType=PriorityQ and preemptable=True (so that SimPy will process preemptive requests correctly).

- Model “system requests that are to be considered as non-preemptive” in SimPy as process objects each of which has exactly the same (low) priority value – for example, either assign all of them a priority value of 0 (zero) or let it default to that value. (This has the effect of maintaining all of these process objects in the waitQ in FIFO order, as explained at the end of the section on Priority requests for a Resource unit, above.)

- Model “system requests that are to be considered as preemptive” in SimPy as process objects each of which is assigned a uniform priority value, but give them a higher value than the one used to model the “non-preemptive system requests” – for example, assign all of them a priority value of 1 (one). Then they will have a higher priority value than any of the non-preemptive requests.

Example. Here is how this works for a Resource with two Resource units; we give the activeQ before the waitQ throughout this example:

- Suppose that the current activeQ and waitQ are [0a,0b] and [0c], respectively.

- A “priority 1” process makes a preemptive request. Then the queues become: [1a,0a] and [0b,0c].

- Another “priority 1” process makes a preemptive request. Then the queues become: [1a,1b] and [0a,0b,0c].

- A third “priority 1” process makes a preemptive request. Then the queues become: [1a,1b] and [1c,0a,0b,0c].

- Process 1a finishes using its resource unit. Then the queues become: [1b,1c] and [0a,0b,0c].

Reneging – leaving a queue before acquiring a resource

In most real world situations, people and other items do not wait forever for a requested resource facility to become available. Instead, they leave its queue when their patience is exhausted or when some other condition occurs. This behavior is called reneging, and the reneging person or thing is said to renege.

SimPy provides an extended (i.e., compound) yield request statement to handle reneging.

Reneging yield request

There are two types of reneging clause, one for reneging after a certain time and one for reneging when an event has happened. Their general form is

yield (request,self,r [,P]),(<reneging clause>)

to request a unit of Resource r (with optional priority P, assuming the Resource has been defined with qType=PriorityQ) but with reneging.

A SimPy program that models Resource requests with reneging must use the following pattern of statements:

yield (request,self,r),(<reneging clause>)
if self.acquired(r):
    ## process got resource and so did NOT renege
    . . . .
    yield release,self,r
else:
    ## process reneged before acquiring resource
    . . . . .

A call to the self.acquired(resource) method is mandatory after a compound yield request statement. It not only indicates whether or not the process has acquired the resource, it also removes the reneging process from the resource’s waitQ.

Reneging after a time limit

To make a process give up (renege) after a certain time, use a reneging clause of the following form:

yield (request,self,r [,P]),(hold,self,waittime)

Here the process requests one unit of the resource r with optional priority P. If a resource unit is available it takes it and continues its PEM. Otherwise, as usual, it is passivated and inserted into r’s waitQ.

The process takes a unit if it becomes available before waittime expires and continues executing its PEM. If, however, the process has not acquired a unit before the waittime has expired it abandons the request (reneges) and leaves the waitQ.
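Under FIFO queueing, the outcome of time-limit reneging can be computed without a full simulation. Clients are considered in arrival order, each takes the earliest unit to become free, and a client whose wait would exceed its patience leaves without taking a unit. The function below is a hypothetical sketch under those assumptions, with equal service times and one patience value for everybody. It treats a unit that frees exactly when patience runs out as acquired, a tie that a real SimPy run might resolve differently.

```python
import heapq


def fifo_with_patience(arrivals, capacity, service_time, patience):
    """Return {name: ('acquired', start) or ('reneged', give_up_time)}.

    arrivals: list of (name, arrival_time) in arrival order.
    """
    free_at = [0] * capacity              # heap: when each unit is next free
    outcome = {}
    for name, arrived in arrivals:
        start = max(arrived, free_at[0])  # earliest moment a unit is free
        if start - arrived > patience:
            # Patience runs out first: leave the waitQ, no unit is used.
            outcome[name] = ('reneged', arrived + patience)
        else:
            heapq.heapreplace(free_at, start + service_time)
            outcome[name] = ('acquired', start)
    return outcome


cars = [('car1', 0), ('car2', 2), ('car3', 57), ('car4', 61)]
result = fifo_with_patience(cars, capacity=1, service_time=60, patience=5)
```

With one parking space, a park_time of 60 and a patience of 5, car2 gives up at time 7. Because car2 never occupied the space, car3 gets it at time 60.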

Example: part of a parking lot simulation:

. . . .
parking_lot=Resource(capacity=10)
patience=5      # wait no longer than "patience" time units
                # for a parking space
park_time=60    # park for "park_time" time units if get a parking space
. . . .
yield (request,self,parking_lot),(hold,self,patience)
if self.acquired(parking_lot):
    # park the car
    yield hold,self,park_time
    yield release,self,parking_lot
else:
    # patience exhausted, so give up
    print "I'm not waiting any longer. I am going home now."

Reneging when an event has happened

To make a process renege at the occurrence of an event, use a reneging clause having a pattern like the one used for a yield waitevent statement, namely waitevent,self,events (see yield waitevent). For example:

yield (request,self,r [,P]),(waitevent,self,events)

Here the process requests one unit of the resource r with optional priority P. If a resource unit is available it takes it and continues its PEM. Otherwise, as usual, it is passivated and inserted into r’s waitQ.

The process takes a unit if it becomes available before any of the events occur, and continues executing its PEM. If, however, any of the SimEvents in events occur first, it abandons the request (reneges) and leaves the waitQ. (Recall that events can be either one event, a list, or a tuple of several SimEvents.)

Example. Queuing for movie tickets (part):

. . . .
seats=Resource(capacity=100)
sold_out=SimEvent()    # signals "out of seats"
too_late=SimEvent()    # signals "too late for this show"
. . . .
# Leave the ticket counter queue when movie sold out
# or it is too late for the show
yield (request,self,seats),(waitevent,self,[sold_out,too_late])
if self.acquired(seats):
    # watch the movie
    yield hold,self,120
    yield release,self,seats
else:
    # did not get a seat
    print 'Who needs to see this silly movie anyhow?'

Exiting conventions and preemptive queues

Many discrete event simulations (including SimPy) adopt the normal “exiting convention”, according to which processes that have once started using a Resource unit stay in some Resource queue until their hold time has completed. This is of course automatically the case for FIFO and non-preemptable PriorityQ disciplines. The point is that the exiting convention is also applied in the preemptable queue discipline case. Thus, processes remain in some Resource queue until their hold time has completed, even if they are preempted by higher priority processes.

Some real-world situations conform to this convention and some do not. An example of one that does conform can be described as follows. Suppose that at work you are assigned tasks of varying levels of priority. You are to set aside lower priority tasks in order to work on higher priority ones. But you are eventually to complete all of your assigned tasks. So you are operating like a SimPy resource that obeys a preemptable queue discipline and has one resource unit. With this convention, half-finished low-priority tasks may be postponed indefinitely if they are continually preempted by higher-priority tasks.

An example that does not conform to the exiting convention can be described as follows. Suppose again that you are assigned tasks of varying levels of priority and are to set aside lower priority tasks to work on higher priority ones. But you are instructed that any tasks not completed within 24 hours after being assigned are to be sent to another department for completion. Now, suppose that you are assigned Task-A that has a priority level of 3 and will take 10 hours to complete. After working on Task-A for an hour, you are assigned Task-B, which has a priority level of 5 and will take 20 hours to complete. Then, at 11 hours, after working on Task-B for 10 hours, you are assigned Task-C, which has a priority level of 1 and will take 4 hours to complete. (At this point Task-B needs 10 hours to complete, Task-A needs 9 hours to complete, and Task-C needs 4 hours to complete.) At 21 hours you complete Task-B and resume working on Task-A, which at that point needs 9 hours to complete. At 24 hours Task-A still needs another 6 hours to complete, but it has reached the 24-hour deadline and so is sent to another department for completion. At the same time, Task-C has been in the waitQ for 13 hours, so you take it up and complete it at hour 28. This queue discipline does not conform to the exiting convention, for under that convention at 24 hours you would continue work on Task-A, complete it at hour 30, and then start on Task-C.
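The two timelines in this example can be checked with an hour-by-hour sketch. run_tasks is hypothetical and deliberately simple. Durations are whole hours and priorities are distinct. In each hour the highest-priority task on hand is worked on, and an optional deadline hands a task off once it has been assigned for that many hours. With deadline=None the sketch follows the exiting convention.

```python
def run_tasks(tasks, deadline=None):
    """Return {name: (outcome, hour)} for one worker handling the tasks.

    tasks: {name: (assigned_at, hours_needed, priority)}; whole hours only.
    """
    remaining = dict((name, spec[1]) for name, spec in tasks.items())
    result = {}
    hour = 0
    while len(result) < len(tasks):
        if deadline is not None:
            # Hand off any unfinished task that has reached its deadline.
            for name, (assigned, _, _) in tasks.items():
                if name not in result and hour >= assigned + deadline:
                    result[name] = ('handed off', hour)
        on_hand = [name for name, (assigned, _, _) in tasks.items()
                   if assigned <= hour and name not in result]
        if on_hand:
            # Spend this hour on the highest-priority task (this is the preemption).
            name = max(on_hand, key=lambda n: tasks[n][2])
            remaining[name] -= 1
            if remaining[name] == 0:
                result[name] = ('completed', hour + 1)
        hour += 1
    return result


tasks = {'Task-A': (0, 10, 3), 'Task-B': (1, 20, 5), 'Task-C': (11, 4, 1)}
with_deadline = run_tasks(tasks, deadline=24)
exiting = run_tasks(tasks)
```

With the 24-hour deadline, Task-B completes at hour 21, Task-A is handed off at hour 24 with 6 hours of work left, and Task-C completes at hour 28. Under the exiting convention Task-A completes at hour 30, and Task-C, started only then, completes at hour 34.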

Recording Resource queue lengths

Many discrete event models are used mainly to explore the statistical properties of the waitQ and activeQ associated with some or all of their simulated resources. SimPy’s support for this includes the Monitor and the Tally. For more information on these and other recording methods, see the section on Recording Simulation Results.

If a Resource, r, is defined with monitored=True, SimPy automatically records the lengths of its waitQ and activeQ in the recorder objects r.waitMon and r.actMon, respectively. This is especially useful for the waitQ, whose length cannot easily be recorded from outside the resource.

The monitorType parameter selects the kind of recorder, either Monitor or Tally; the default is Monitor. A Monitor keeps complete time series for both queue lengths, which can be used for advanced post-simulation statistical analyses as well as for displaying summary statistics (such as averages, standard deviations, and histograms). A Tally supports summary statistics but does not keep complete time series.
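Why a Tally can report summaries but not a time history can be illustrated with a small running-summary class. This is a hypothetical sketch, not SimPy's Tally implementation. It shows that the count, mean, variance and time average can all be updated from running sums, so the series itself never has to be stored. The class name RunningSummary, its method names, and the assumption that the recorded value is 0 before the first observation are illustrative choices.

```python
class RunningSummary(object):
    """Hypothetical Tally-like recorder: keeps running sums, not the series."""

    def __init__(self, start_time=0):
        self.start_time = start_time
        self.n = 0             # number of observations
        self.total = 0.0       # sum of observed values
        self.total_sq = 0.0    # sum of squared values
        self.area = 0.0        # integral of the value over time so far
        self.last_time = start_time
        self.last_value = 0    # assumed value before the first observation

    def observe(self, value, t):
        # Close the step that has been in force since the last observation.
        self.area += self.last_value * (t - self.last_time)
        self.last_time, self.last_value = t, value
        self.n += 1
        self.total += value
        self.total_sq += value * value

    def mean(self):
        return self.total / self.n

    def var(self):
        # Population variance: E[y^2] - (E[y])^2.
        m = self.mean()
        return self.total_sq / self.n - m * m

    def time_average(self, t_end):
        # Extend the last value up to t_end, then divide by elapsed time.
        area = self.area + self.last_value * (t_end - self.last_time)
        return area / (t_end - self.start_time)

wait = RunningSummary()
for t, y in [(0, 1), (0, 2), (0, 3), (100, 2), (200, 1), (300, 0)]:
    wait.observe(y, t)
print(wait.time_average(400))   # 1.5
print(wait.mean())              # 1.5
```

Fed the waitQ observations from the example below, it reproduces the (Time) Average and (Number) Average without keeping any [time, value] pairs. The sum-of-squares variance formula is the simplest choice; it can lose precision when the values are large compared with their spread.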

Example. The following program uses a Monitor to record the queues of the server resource. After the simulation ends, it displays summary statistics for each queue and then their complete time series:

```python
from SimPy.Simulation import *
from math import sqrt

class Client(Process):
    inClients=[]
    outClients=[]

    def __init__(self,name):
        Process.__init__(self,name)

    def getserved(self,servtime,myServer):
        print self.name, 'requests 1 unit at t =',now()
        yield request, self, myServer    # wait (in waitQ if necessary) for the unit
        yield hold, self, servtime       # service time while in the activeQ
        yield release, self, myServer
        print self.name,'done at t =',now()

initialize()
# monitored=True makes SimPy record server.waitMon and server.actMon
server=Resource(capacity=1,monitored=True,monitorType=Monitor)
c1=Client(name='c1') ; c2=Client(name='c2')
c3=Client(name='c3') ; c4=Client(name='c4')
# all four clients request the single server unit at t = 0
activate(c1,c1.getserved(servtime=100,myServer=server))
activate(c2,c2.getserved(servtime=100,myServer=server))
activate(c3,c3.getserved(servtime=100,myServer=server))
activate(c4,c4.getserved(servtime=100,myServer=server))
simulate(until=500)

print
# summary statistics for the waitQ
print '(Time) Average no. waiting:',server.waitMon.timeAverage()
print '(Number) Average no. waiting:',server.waitMon.mean()
print '(Number) Var of no. waiting:',server.waitMon.var()
print '(Number) SD of no. waiting:',sqrt(server.waitMon.var())
# summary statistics for the activeQ
print '(Time) Average no. in service:',server.actMon.timeAverage()
print '(Number) Average no. in service:',server.actMon.mean()
print '(Number) Var of no. in service:',server.actMon.var()
print '(Number) SD of no. in service:',sqrt(server.actMon.var())
# complete time series, available because monitorType=Monitor
print '='*40
print "Time history for the 'server' waitQ:"
print '[time, waitQ]'
for item in server.waitMon:
    print item
print '='*40
print "Time history for the 'server' activeQ:"
print '[time, activeQ]'
for item in server.actMon:
    print item
```

The output from this program is:

```
c1 requests 1 unit at t = 0
c2 requests 1 unit at t = 0
c3 requests 1 unit at t = 0
c4 requests 1 unit at t = 0
c1 done at t = 100
c2 done at t = 200
c3 done at t = 300
c4 done at t = 400

(Time) Average no. waiting: 1.5
(Number) Average no. waiting: 1.5
(Number) Var of no. waiting: 0.916666666667
(Number) SD of no. waiting: 0.957427107756
(Time) Average no. in service: 1.0
(Number) Average no. in service: 0.5
(Number) Var of no. in service: 0.25
(Number) SD of no. in service: 0.5
========================================
Time history for the 'server' waitQ:
[time, waitQ]
[0, 1]
[0, 2]
[0, 3]
[100, 2]
[200, 1]
[300, 0]
========================================
Time history for the 'server' activeQ:
[time, activeQ]
[0, 1]
[100, 0]
[100, 1]
[200, 0]
[200, 1]
[300, 0]
[300, 1]
[400, 0]
```

This output illustrates the difference between the (Time) Average and the number statistics. Here process c1 was in the waitQ for zero time units, process c2 for 100 time units, and so forth. The total wait time accumulated by all four processes during the entire simulation run, which ended at time 400, amounts to 0 + 100 + 200 + 300 = 600 time units. Dividing the 600 accumulated time units by the simulation run time of 400 gives 1.5 for the (Time) Average number of processes in the waitQ. It is the time-weighted average length of the waitQ, but is almost always called simply the average length of the waitQ or the average number of items waiting for a resource.

It is also the expected number of processes you would find in the waitQ if you took a snapshot of it at a random time during the simulation. The activeQ's time average is computed the same way; in this example the resource is held by some process throughout the simulation, giving 400/400 = 1.0. Although the number in the activeQ drops to zero when one process releases the resource and immediately rises to one when the next process acquires it, this happens at a single instant and so contributes nothing to the (Time) Average.

Number statistics such as the Average, Variance, and SD are computed differently: each recorded value counts once, regardless of how long it persisted. At time zero the number of processes in the waitQ starts at 1, then rises to 2, and then to 3. At time 100 it drops back to 2, and so forth. The average and standard deviation of the six values [1, 2, 3, 2, 1, 0] are 1.5 and 0.9574..., respectively; the variance, 0.9167, divides the sum of squared deviations (5.5) by the number of values (6). Number statistics for the activeQ are computed from the eight values [1, 0, 1, 0, 1, 0, 1, 0] and are as shown in the output.
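Both kinds of statistic can be reproduced from the [time, value] pairs printed in the output. The sketch below is plain Python, not SimPy's Monitor code. The helper names time_average and number_stats are hypothetical, and the time average is assumed to run from the first observation to the end time passed in (400 here).

```python
from math import sqrt

def time_average(series, t_end):
    """Time-weighted average of a step function given as [time, value] pairs.

    Each value holds from its own time until the next observation (or t_end).
    """
    area = 0.0
    following = series[1:] + [[t_end, None]]
    for (t, y), (t_next, _) in zip(series, following):
        area += y * (t_next - t)
    return area / (t_end - series[0][0])

def number_stats(series):
    """Mean, population variance and SD of the values, ignoring time."""
    values = [y for _, y in series]
    n = len(values)
    mean = float(sum(values)) / n
    var = sum((y - mean) ** 2 for y in values) / n
    return mean, var, sqrt(var)

wait_series = [[0, 1], [0, 2], [0, 3], [100, 2], [200, 1], [300, 0]]
active_series = [[0, 1], [100, 0], [100, 1], [200, 0],
                 [200, 1], [300, 0], [300, 1], [400, 0]]

print(time_average(wait_series, 400))    # 1.5
print(time_average(active_series, 400))  # 1.0
print(number_stats(wait_series))         # mean 1.5, var about 0.917, SD about 0.957
print(number_stats(active_series))       # (0.5, 0.25, 0.5)
```

The two values at time 0 that are immediately superseded (1 and 2) contribute zero area to the time average but count fully in the number statistics, which is exactly the difference described above.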

When the monitorType is changed to Tally, all the output up to and including the lines:

Time history for the 'server' waitQ:

[time, waitQ]

is displayed. The output then ends with an error message about the reference to server.waitMon in the loop that prints its time history. This is because a Tally does not keep the complete time series that the loop tries to read.


Levels

The three resource facilities provided by the SimPy system are Resources, Levels and Stores. Each models a congestion point where process objects may have to queue up to obtain resources. This section describes the Level type of resource facility.

Levels model the production and consumption of a homogeneous undifferentiated “material.” Thus, the currently-available amount of material in a Level resource facility can be fully described by a scalar (real or integer). Process objects may increase or decrease the currently-available amount of material in a Level facility.

For example, a gasoline station stores gas (petrol) in large tanks. Tankers increase, and refueled cars decrease, the amount of gas in the station's storage tanks. Both get and put requests may be subject to reneging, just as requests for a Resource may be.

Defining a Level

You define the Level resource facility lev by a statement like this:

```python
lev = Level(name='a_level', unitName='units',
            capacity='unbounded', initialBuffered=0,
            putQType=FIFO, getQType=FIFO,
            monitored=False, monitorType=Monitor)
```

where

- name (string type) is a descriptive name by which the Level object lev is known (e.g., 'inventory').

- unitName (string type) is a descriptive name for the units in which the amount of material in lev is measured (e.g., 'kilograms').

- capacity (positive real or integer) is the capacity of the Level object lev. The default, 'unbounded', is interpreted as sys.maxint.

- initialBuffered (positive real or integer) is the initial amount of material in the Level object lev.

- putQType (FIFO or PriorityQ) is the (producer) queue discipline.

- getQType (FIFO or PriorityQ) is the (consumer) queue discipline.

- monitored (boolean) specifies whether the queues and the amount of material in lev will be recorded.

- monitorType (Monitor or Tally) specifies which type of Recorder to use. Defaults to Monitor.

Every Level resource object, such as lev, also has the following additional attributes:

- lev.amount is the amount currently held in lev.

- lev.putQ is the queue of processes waiting to add amounts to lev, so len(lev.putQ) is the number of processes waiting to add amounts.

- lev.getQ is the queue of processes waiting to get amounts from lev, so len(lev.getQ) is the number of processes waiting to get amounts.

- lev.monitored is True if the queues are to be recorded. In this case lev.putQMon, lev.getQMon, and lev.bufferMon exist.

- lev.putQMon is a Recorder observing lev.putQ.

- lev.getQMon is a Recorder observing lev.getQ.

- lev.bufferMon is a Recorder observing lev.amount.

Getting amounts from a Level

Processes can request amounts from a Level and the same or other processes can offer amounts to it.

A process, the requester, can request an amount ask from the Level resource object lev by a yield get statement:

- yield get,self,lev,ask[,P]

Here ask must be a positive real or integer (the amount) and P is an optional priority value (real or integer). If lev does not hold enough to satisfy the request (that is, ask > lev.amount) the requesting process is passivated and queued (in lev.getQ) in order of its priority. Subject to the priority order, it will be reactivated when there is enough to satisfy the request.

self.got holds the amount actually received by the requester.

Putting amounts into a Level

A process, the offerer, which is usually but not necessarily different from the requester, can offer an amount give to a Level, lev, by a yield put statement:

- yield put,self,lev,give[,P]

Here give must be a positive real or integer, and P is an optional priority value (real or integer). If the amount offered would lead to an overflow (that is, lev.amount + give > lev.capacity) the offering process is passivated and queued (in lev.putQ). Subject to the priority order, it will be reactivated when there is enough space to hold the amount offered.

The getQ and putQ of a Level are ordered like the waitQ of a Resource, except that they are not preemptable. Priority values are ignored when the queue type is FIFO; with PriorityQ, processes with higher priority values are placed ahead of those with lower ones.
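The blocking rules for get and put can be sketched as plain bookkeeping. ToyLevel below is hypothetical and is not how SimPy implements Level. Requests are (name, amount) tuples rather than processes, only FIFO order is modeled (no PriorityQ), waiting requests are assumed to be served strictly in queue order, and an unbounded capacity is represented by float('inf') instead of sys.maxint.

```python
from collections import deque

class ToyLevel(object):
    """Hypothetical bookkeeping of a Level-like buffer with FIFO queues."""

    def __init__(self, capacity=float('inf'), initialBuffered=0):
        self.capacity = capacity
        self.amount = initialBuffered
        self.getQ = deque()   # waiting (name, ask) requests
        self.putQ = deque()   # waiting (name, give) offers
        self.log = []         # (name, 'got' or 'put', quantity), in completion order

    def get(self, name, ask):
        self.getQ.append((name, ask))
        self._serve()

    def put(self, name, give):
        self.putQ.append((name, give))
        self._serve()

    def _serve(self):
        # Serve the head of each queue while it can be satisfied; stop when
        # neither head can proceed (requests behind a blocked head wait too).
        progress = True
        while progress:
            progress = False
            if self.getQ and self.getQ[0][1] <= self.amount:
                name, ask = self.getQ.popleft()
                self.amount -= ask
                self.log.append((name, 'got', ask))
                progress = True
            if self.putQ and self.amount + self.putQ[0][1] <= self.capacity:
                name, give = self.putQ.popleft()
                self.amount += give
                self.log.append((name, 'put', give))
                progress = True

lev = ToyLevel(capacity=10, initialBuffered=2)
lev.get('car1', 5)       # 5 > 2: car1 waits in getQ
lev.put('tanker1', 6)    # accepted (8), then car1 is served (3)
lev.put('tanker2', 9)    # 3 + 9 > 10: tanker2 waits in putQ
lev.get('car2', 2)       # served (1), which makes room for tanker2 (10)
print(lev.amount)        # 10
print(lev.log)
# [('tanker1', 'put', 6), ('car1', 'got', 5), ('car2', 'got', 2), ('tanker2', 'put', 9)]
```

Here car1 waits in getQ until tanker1's delivery arrives, and tanker2 waits in putQ until car2's withdrawal leaves enough space below the capacity.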

Example. Suppose that a random demand on an inventory is made each day. Each requested amount is normally distributed with a mean of 1.2 units and a standard deviation of 0.2 units. The inventory (modeled as an object of the Level class) is refilled by 10 units at fixed intervals of 10 days. There are no back-orders, but an accumulated sum of the total stock-out quantities is to be maintained. A trace is to be printed out each day and whenever there is a stock-out:

from SimPy.Simulation import *

from random import normalvariate,seed